The market will resolve yes if gpt2-chatbot turns out to be OpenAI's next-generation model such as GPT-4.5 or GPT-5. If the model is just a new version of GPT-4, then the market will resolve No.

🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ5,815 |
| 2 | | Ṁ556 |
| 3 | | Ṁ531 |
| 4 | | Ṁ522 |
| 5 | | Ṁ232 |


@traders i think gpt2-chatbot is both a variation of gpt-4 and openai’s next generation model - should i resolve N/A or 50%

@Soli What if it is not continue-trained on GPT-4, nor trained from scratch, but follows GPT-4's recipe, while at the same time demonstrating noticeable improvements in capabilities?

Can gpt-4o be considered openai's next-generation model (e.g. 4.5 or 5)?

No, because:

- intelligence leap not comparable to gpt-3 to gpt-4 jump
- various metrics show smaller improvement
- incremental rather than revolutionary advancement
- lack of official next-gen designation from openai
- announced "frontier models" coming soon at end of presentation
- model's efficiency and speed suggest it's not dramatically larger
- 4 in name implies iteration on gpt-4, not new generation

Yes, because

next-gen status is not solely determined by model size:

llama 3 8b is considered next-gen despite smaller size

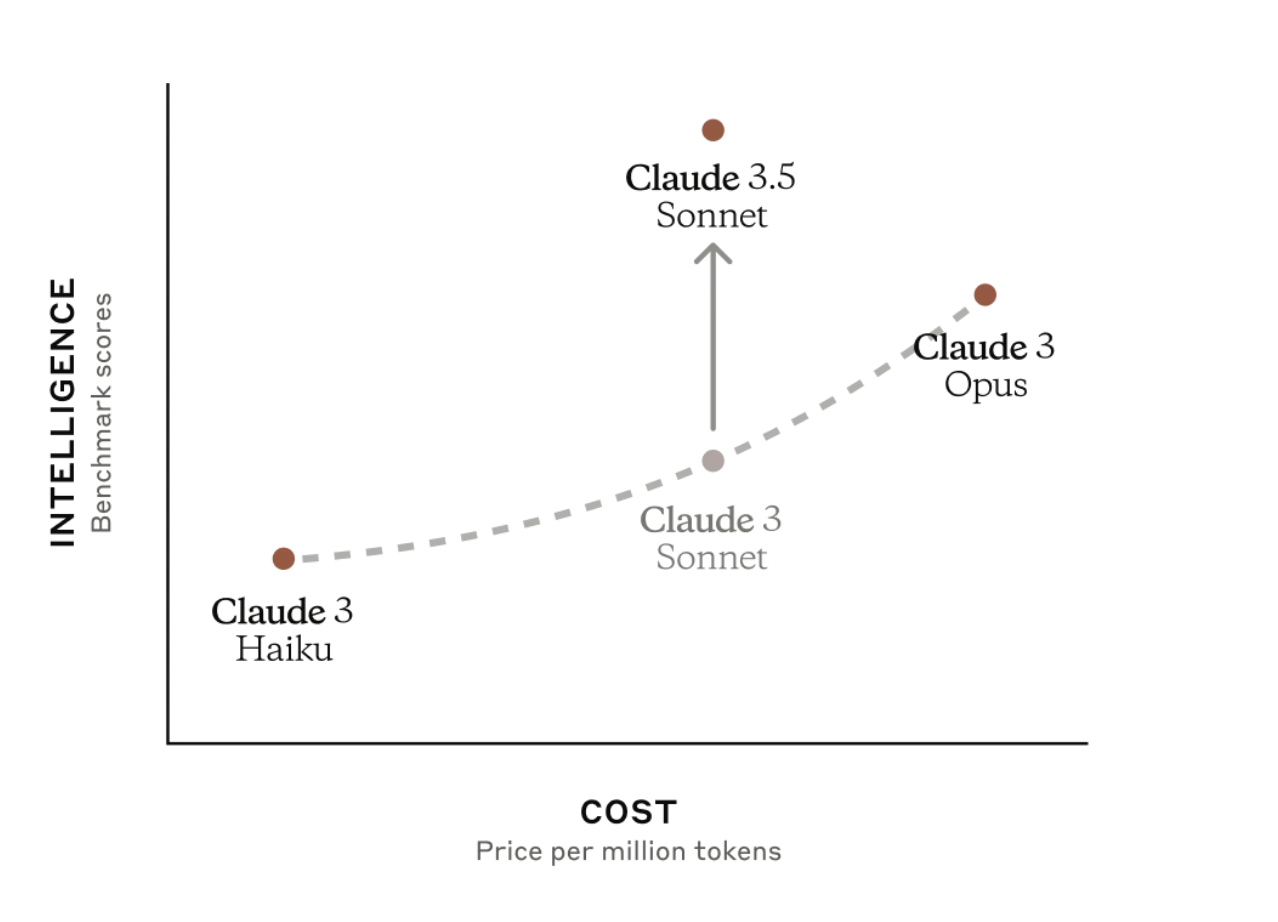

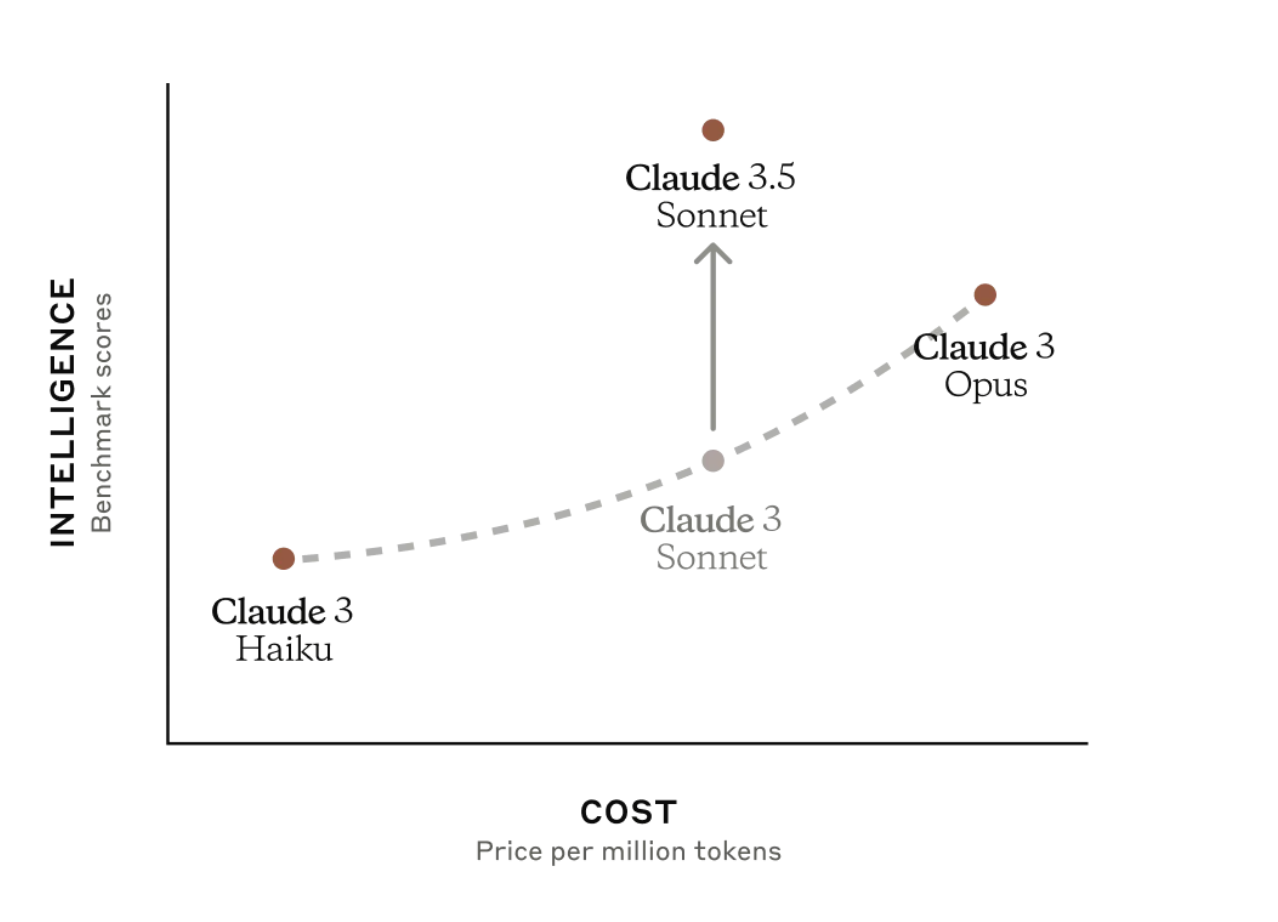

anthropic's claude 3.5 sonnet is next-gen and most intelligent, but smaller than opus 3

industry trends shifting towards viewing model families rather than strict generational leaps

significant capabilities and advancements:

multimodal integration: text, audio, and vision capabilities

improved efficiency and lower operational costs

not merely a fine-tuned version of gpt-4, but architecturally distinct

released through a major event:

launch signifies importance and marks a significant milestone

demonstrates openai's commitment to positioning it as a major advancement

Ultimately, there's no right or wrong way to answer this question. At the end of the day, these are all marketing terms. However, based on everything I listed above, I personally think of GPT-4o as next-generation. While it doesn't represent as dramatic a leap as GPT-3 to GPT-4, it introduces significant new capabilities and efficiencies. It's likely more of a 0.5 jump than a full generational leap, aligning with the recent release of Claude 3.5 Sonnet. It seems that moving forward a nuanced view of generations is needed. You can get a new generation of a small model. "Next-generation" might not always mean vastly larger or more intelligent, but rather more capable, efficient, or versatile. GPT-4o embodies this trend, making a strong case for its consideration as a next-gen model despite counterarguments.

@traders i will resolve this question in 24 hours if no one has strong objections. i lean towards gpt-4o is openai’s next-gen model. it’s not the largest, and openai will probably release a bigger model this year. but i think 4o and any new model would be part of the same family, like claude 3 with opus and sonnet, or llama 3 with the 70B and 400B models. gpt-4o is more efficient, cheaper, supports new modalities, isn’t just a fine-tuned version of gpt-4, and was announced through a major event.

I think a 50% resolution would make sense, given the ambiguity. But YES would also be reasonable.

I don't think we can safely say the next model will be part of the same family; in fact, I wouldn't be surprised if the decision to call it "4o" was because they expect 5 to be much more clearly "next-gen" and don't want to dilute the brand implications of a new number. They've also heavily downplayed it in the marketing, framing it as just a cheap knockoff GPT-4.

OTOH, it is better than 4 on most metrics. And with Anthropic putting out a very similar model in Claude 3.5 Sonnet, we kind of have a "generation" to lump together. It's clearly not a 3-to-4 level jump, but a 3-to-3.5 level jump? Plausibly.

@Soli resolve how you like, but FWIW I don't expect the next big model to be "part of the same family" in that sense. If that were likely, I don't think this one would have been marketed with the "GPT-4" branding. That would be very confusing, if a "GPT-4 Ultra" came out and we were supposed to interpret "4o" and "4 Ultra" as part of the same, new family but not 4 Turbo.

Obviously upcoming models are likely to incorporate approaches learned developing previous models, so there will be tech in common, and to some degree "families" of models are a marketing thing. But I think that if OpenAI release a bigger, more powerful model, and if it comes in multiple sizes, GPT-4o won't be one of the sizes. I'd think they would have marketed it as such at launch if that was what they were planning.

@chrisjbillington how do you feel about this decision now that some time has passed and openai released 4o-mini? does the family argument now make more sense?

@Soli I haven't read any news and didn't know they'd released a GPT-4o mini, but I'd assumed we were talking about GPT-4o being the mini version of a future larger model, and this isn't that. Releasing a smaller version of whatever their latest models are wouldn't be that surprising at any point, it doesn't seem like it suggests much.

With hindsight my view hasn't changed, no.

duplicate

OpenAI has made it clear that GPT-4.5/GPT-5/{whatever they're gonna call it} is coming later this year. That will be the next generation after GPT-4.

GPT-4o is called GPT-4 and is just at the level of an updated GPT-4.

So, if we're following the resolution criteria, NO seems to be obviously correct.

Well, that's the logic I used to resolve my market. But nobody seemed happy about my decision 😆

P.S. I do think it is a new model in a sense that GPT-4-turbo was not. And it might even be an aborted version of what will become GPT-5. But (turn your big brain on) it having next-generation model architecture is different to it actually being a next-generation model. Once you understand that, any lingering doubts about resolving NO ought to dissipate.

@traders i think gpt2-chatbot is both a variation of gpt-4 and openai’s next generation model - should i resolve N/A or 50%

@Soli Honestly I think all these questions should probably have been resolved N/A, given the ambiguity.

Resolving to 50% would be bad for those of us who bet NO, which would suck for me personally (I've really taken a beating over this whole thing), but it's defensible.
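To make the stakes of each resolution option concrete, here is a minimal sketch of how a NO position would pay out under YES, NO, 50%, and N/A. It assumes a simplified payout model (each share pays its side's resolved probability, and N/A simply refunds the amount spent); the `payout` helper and the position of 1,000 NO shares bought for Ṁ800 are hypothetical and not taken from anyone's actual trades.

```python
def payout(shares: float, cost: float, side: str, resolution) -> float:
    """Return the mana paid out for a position under a given resolution.

    Simplified, assumed model:
      - "YES" / "NO" pay 1 per winning share and 0 per losing share
      - a float in [0, 1] is a partial (PROB) resolution, e.g. 0.5 for 50%
      - "N/A" unwinds the market and refunds what was spent
    """
    if resolution == "N/A":
        return cost
    prob = {"YES": 1.0, "NO": 0.0}.get(resolution, resolution)
    per_share = prob if side == "YES" else 1.0 - prob
    return shares * per_share


# Hypothetical position: 1,000 NO shares bought for a total of 800 mana.
shares, cost = 1000.0, 800.0
for resolution in ["NO", 0.5, "N/A", "YES"]:
    paid = payout(shares, cost, "NO", resolution)
    print(f"resolution={resolution}: payout={paid:.0f}, profit={paid - cost:+.0f}")
```

Under these assumptions a 50% resolution turns a NO position bought at a high price into a loss, while N/A leaves it flat, which is the asymmetry being described here.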

@MugaSofer I am open to resolving this as N/A. Out of curiosity, which other markets are you referring to?

@Soli Actually, re-checking, I think it was pretty much just https://manifold.markets/jim/is-gpt2chatbot-gpt5?r=TXVnYVNvZmVy. Felt like more in the chaos of the day.

(I bet 2k YES there; managed to sell before resolution but still lost about 1.5k. I then bet NO here as an attempt to recoup losses, on the assumption it would resolve the same, but that's 100% not your fault.)

@Soli It looks like the other major markets with wording along these lines were by @Joshua and resolved YES! So of the three market-makers, we've got one resolving NO, one resolving YES, and one somewhere in-between. sigh

I think that reinforces that you're right; it's really too ambiguous for a definitive resolution.

@MugaSofer I would be slightly annoyed if i were you though haha 😅. Let's wait for other participants in the market to share their opinions before we reach a conclusion. It is chaos when this happens but it is also why i enjoy creating questions. You can never think of all edge cases.

@Soli IMO this is a NO, but I'm biased because I'm holding NO. I did invest with the understanding that it would have to be something GPT-4.5/GPT-5 level, and I don't think it is. Don't get me wrong, it's probably better than that for the sole reason of it being available for free, but performance wise it isn't that big of a jump.

@ShadowyZephyr i think what makes gpt-4o openai’s next-generation model is the native audio and vision support

@ShadowyZephyr i wonder if this one can also output images. and yes, but this time you also have audio, which is a significant improvement, plus sam says it is cheaper to run

openai are the ones who determine if this model is their next generation model or not and they clearly label it as their flagship new model in their branding

@Soli Fine, then N/A. I still don't think it's GPT-4.5 level and there's a reason they didn't call it such. It's meant for accessibility, not raw performance (a noble goal)

@Soli I think it's a NO - it's clearly a version of GPT-4. By "next generation" in the description, most would expect you to have meant "not GPT-4".

In the future when we look back on these models and talk about them, if we group them into generations, this one will be firmly in with the "GPT-4" generation.

It might be their latest model and their newest flagship, but it's definitely a variation of GPT-4 and your description suggested these were mutually exclusive categories (and I think they are). It has been marketed as GPT-4, with OpenAI saying they're "bringing GPT-4-level intelligence to their free users" and the like.

Yeah it's faster - but so was GPT-4-turbo, and you wouldn't have counted that, presumably.

Yeah it's a little smarter, but we've seen improvements of that kind of magnitude within the GPT-4 generation already. Now that lmsys have plugged some of the unblinding that inflated its ELO score, the sheen has worn off a bit, with GPT-4o beating the latest GPT-4 Turbo by 35 points, less than the 87 point gap between the latest Turbo and the original GPT-4.
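For a sense of scale, the two rating gaps quoted above can be converted into expected head-to-head scores with the standard Elo formula. This is a rough sketch: the 35 and 87 point gaps are the figures from this comment, the function name is illustrative, and it assumes the leaderboard ratings are on the usual 400-point logistic Elo scale.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side (ties count as half a win)
    under the standard Elo model with a 400-point scale."""
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


gaps = {
    "GPT-4o vs latest GPT-4 Turbo": 35,
    "latest GPT-4 Turbo vs original GPT-4": 87,
}
for label, gap in gaps.items():
    print(f"{label}: {gap} points -> expected score {elo_expected_score(gap):.3f}")
```

On that assumption, a 35-point gap corresponds to the stronger model being preferred roughly 55% of the time, and an 87-point gap to roughly 62%.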

And it's not uniformly smarter - OpenAI have seemingly cut some corners somewhere to make it fast, so they are careful to not suggest it is actually more intelligent in most of their official documentation (though it does seem to be, on average).

Ok its extra modalities are more native now. Would you have counted GPT-4 Vision?

Of course I would say that though, since I'm a NO holder. But since there hasn't been a whole lot of pushback against resolving to something else, thought I might as well make the case.

I'm not going to be mad or anything, but I think it's a NO and that if we weren't excited about a launch (e.g. if we look back on this in a year), people would mostly agree with me.

@chrisjbillington I enjoyed reading your comment. It is very well thought-through and made me notice some inconsistencies in my thinking. I never considered gpt-4 vision to be a fundamentally different model than gpt-4, so why do I consider gpt-4o to be a fundamentally different model just because it includes audio? Also, it is interesting that the gap between gpt-4o and gpt-4-turbo is less than between the latest version of turbo and the original gpt-4.

However, I still have one question: who decides whether a model is OpenAI's next-generation model? Does OpenAI decide it themselves, or are users the ones who decide? I have never seen anyone claiming that the latest iPad is not Apple's next-generation iPad because all Apple decided to improve was the weight of the device. Judging by the way OpenAI created a whole event around the release of this model and its branding, it is clear to me that they want users to see this as their next-generation model. The fact that the model did not improve how we expected it to should not be a factor.

@chrisjbillington The argument for YES is that it's a new model, not a finetune of GPT-4.

There's at least 4 reasonable ways to define "next generation model":

1. By name (which my market uses): GPT-4o would be in the GPT-4 family with separate branding. I would probably resolve this market NO by name, though my market resolved YES because "separate branding" was specified.

2. By capabilities. This is kind of what you're saying. Some specific benchmarks, some ELO points, new multimodality, etc. The problem with resolving on this is that no benchmarks were ever specified. So how do you know what "next generation capabilities" should be? You do have the tools to estimate that based on financing and scaling laws and stuff, but nobody did that. So I think resolving on capabilities is unfounded, given that no expectation was stated, so it comes down to everyone's feelings. If you're not excited, maybe you're just a person that never gets excited about anything, like Gary Marcus. Or some people get excited about literally everything.

3. By "hype". Do journalists get excited? Does OpenAI make a certain amount of revenue from it? Is it trending on Twitter? etc. See the "everyone's excitement is different, so you have to specify a specific organization or person to judge their excitement" point too.

4. By ancestry to GPT-4. This is clearly YES because it is a new model trained end-to-end. They probably have other versions in the backroom, and gave us the version that's just barely the best. So in terms of benchmark scores it's similar to GPT-4, but it is a new model. It doesn't share training ancestry with GPT-4.

So of these, the phrase "If the model is just a new version of GPT-4, then the market will resolve No." in the description makes me anchor to "by ancestry to GPT-4", which is YES because it's not a new version of GPT-4. It's a new model. Its training ancestry is known to be separate from the original GPT-4.

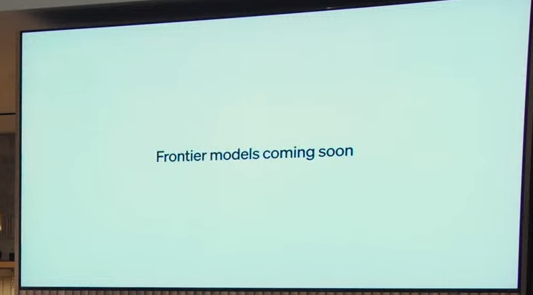

@Soli I think I am happy either with sufficiently obvious marketing that it's next-gen, or with a sufficiently large capabilities jump as being generation-defining. In this case I don't think we have either. They're still calling it GPT-4, and saying that "frontier models are coming soon" - suggesting this one didn't push the frontier in the main way that is relevant to OpenAI (which I think is likely to be: raw intelligence). If they called it GPT-5, I think we'd have to accept the generations were getting substantially closer together. But they didn't.

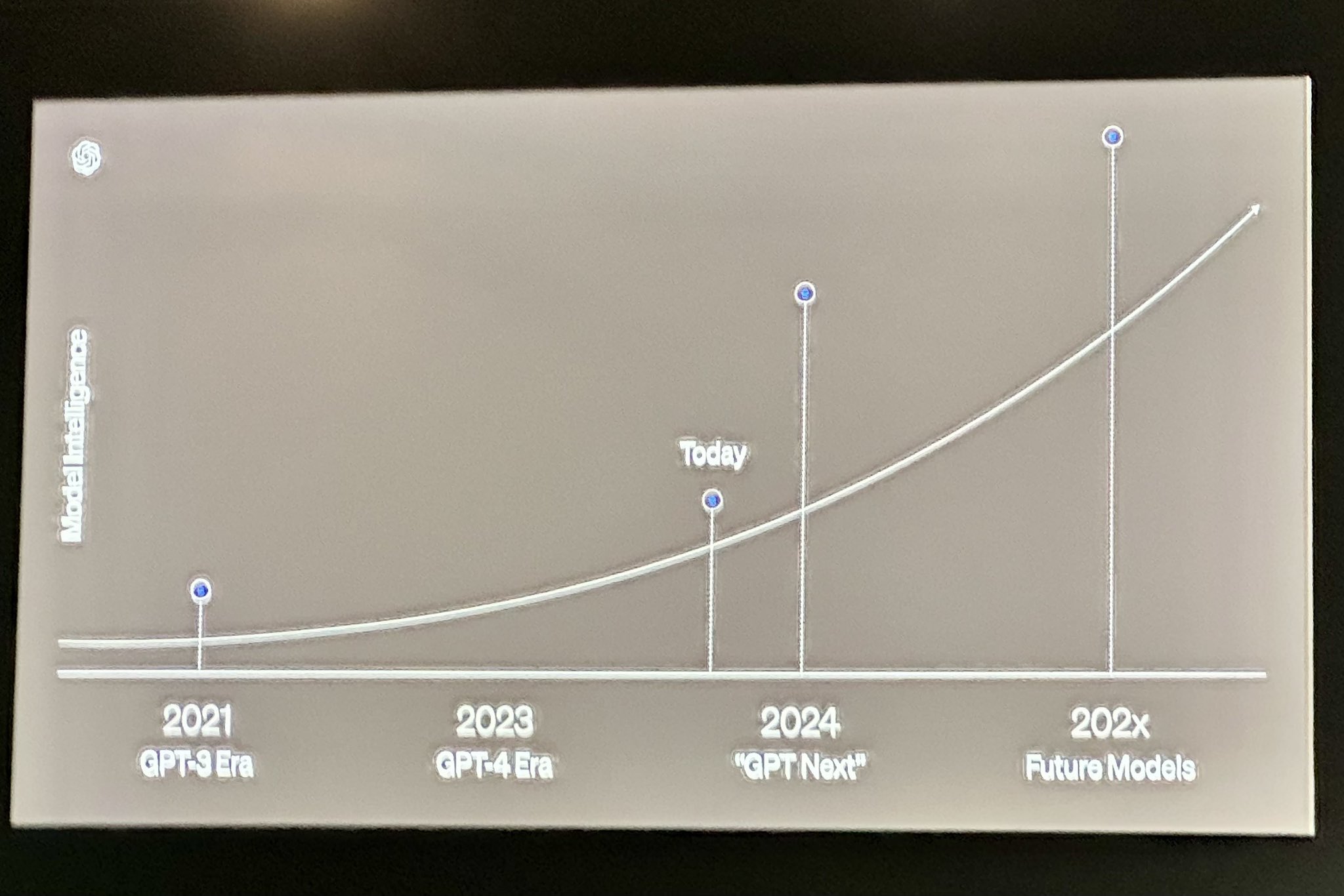

Did you see the end of their presentation where this slide popped up for a moment?

The transcript at that time reads:

Today has been very much focused on the free users and the new modalities and new products. But we also care a lot about the next frontier. So soon we will be updating you on our progress towards the next big thing.

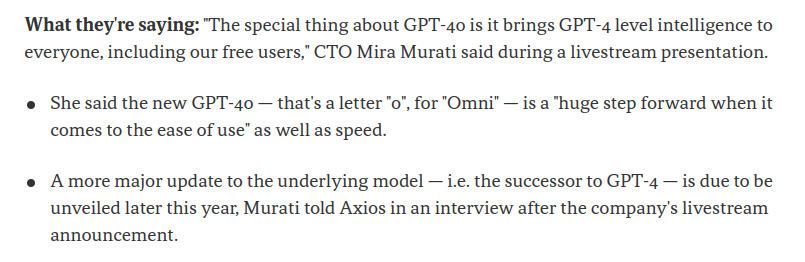

There's also these quotes from an interview after the event:

I think by not bumping the number, as well as saying things like this, they are very much marketing this model as the consolidation of their work in the GPT-4 generation, rather than being the next generation.

@chrisjbillington This blog post uses the phrase "new flagship model"

The focus is clearly on cost though.

Though maybe that should be emphasized: If Llama 3 8B was as good as some old 400B model, it could reasonably be described as "next generation capabilities" in its "weight class".

We still don't "know" GPT-4's exact parameter count (we know, but I wouldn't resolve a market based on it), so we can't compare parameters or FLOPs. But theoretically it makes sense to resolve a hypothetical different market on "same capabilities but in a lower weight class".

@chrisjbillington This is the first time I've seen all of this. These are strong counterpoints to the argument that OpenAI is branding gpt-4o as its next-generation model. But you can make an equally convincing case that they are. They weren't super consistent in their messaging around this model. See a screenshot below of gpt-4o's landing page which uses words such as "flagship" and highlights the new modalities and speed "across audio, vision, and text in real time".

Anyways, this clearly weakens my argument that OpenAI is branding this as their next-generation model. I now have another question that probably @Mira can answer best. What did the n in gpt-n stand for historically?

@Mira Is GPT-4 Turbo a fine-tune? The previous added vision modality can't have been.

> by hype

is a heuristic I really don't like. As a seasoned "nothing ever happens" bettor, it bugs me when hype alone is capable of influencing market resolution - hype is not distinguishable from others simply predicting wrong. Had this model been worse than GPT-4 and never released, it still would have had crazy hype the few weeks prior.

> By ancestry to GPT-4

You don't think it has ancestry in the relevant sense? Or if you literally mean based on GPT-4 training checkpoints, you think all previous GPT-4 models do have ancestry in that way? I don't think being based on checkpoints is necessary - some open-source models are released with training data and model code and you can train them yourself, and we still speak of it as if it's the same model (and it would still mostly be if you tweaked it a little before re-training - if you fine tune after or tweak before, what's the difference?). And I don't think we should expect GPT-4 Turbo is a fine tune - do you think it is?

OpenAI clearly still think it's a similar enough architecture, its system prompt is:

You are ChatGPT, a large language model trained by OpenAI, based on the GPT-4 architecture.

Knowledge cutoff: 2023-10

Current date: 2024-05-20

Image input capabilities: Enabled

Personality: v2

Which just says "GPT-4 architecture" and, other than the knowledge cutoff (which is 2 months earlier), is identical to the system prompt you get when you're using GPT-4 Turbo.

By comparison, ChatGPT-3.5's system prompt says 3.5, not 3:

You are ChatGPT, a large language model trained by OpenAI, based on the GPT-3.5 architecture.

> The previous added vision modality can't have been

GPT-4 was originally demo'd with vision capabilities. There was a "leaked" Discord bot where people could use it. They later released it with vision, but it was originally trained with vision too. The original "gpt-4-0314" wasn't released with vision but was trained with it.

We have a similar situation with "gpt-4o" actually: It does multimodal text, vision, audio input and also output. But we're not getting the output until later. So if it's "released" in 6 months or 9 months and maybe even improved, it still is already present to be released if they wanted and not new.

> What did the n in gpt-n stand for historically?

FLOPs is probably the best metric overall. This isn't obviously correct, because you might imagine they have all sorts of architecture improvements that could mean a FLOP in an inefficient architecture is worth less than a FLOP in a more efficient one. Or they could train it differently with reinforcement learning(not RLHF) or some energy system.

But surprisingly FLOPs does end up being the best measure. Maybe "FLOPs by size", but still FLOPs. GPT-4 is 10x larger and 100x as many FLOPs as GPT-3. GPT-2 to GPT-3 was 120x size and 200x FLOPs.

But we're not getting that information. They don't tell us size or FLOPs.
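As a rough illustration of what those ratios would imply, the sketch below uses the common rule of thumb that training compute scales as about 6 × parameters × training tokens, so a FLOPs ratio divided by a size ratio gives an implied training-token ratio. The size and FLOPs multipliers are the figures quoted in this comment, not official numbers, and the helper name is made up for the example.

```python
def implied_token_ratio(size_ratio: float, flops_ratio: float) -> float:
    """With training compute C ~ 6 * N * D (N parameters, D tokens),
    C2 / C1 = (N2 / N1) * (D2 / D1), so D2 / D1 = flops_ratio / size_ratio."""
    return flops_ratio / size_ratio


# (size multiplier, FLOPs multiplier) as claimed in the comment above.
claimed_jumps = {
    "GPT-2 -> GPT-3": (120.0, 200.0),
    "GPT-3 -> GPT-4": (10.0, 100.0),
}
for jump, (size_ratio, flops_ratio) in claimed_jumps.items():
    tokens = implied_token_ratio(size_ratio, flops_ratio)
    print(f"{jump}: {size_ratio:g}x size, {flops_ratio:g}x FLOPs "
          f"-> about {tokens:.2f}x training tokens")
```

Taken at face value, the quoted numbers would mean the GPT-3 to GPT-4 jump came much more from additional training data than the GPT-2 to GPT-3 jump did, which is one reason FLOPs and size can tell different stories.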

@Mira thank you for the explanation.

> But surprisingly FLOPs does end up being the best measure. Maybe "FLOPs by size", but still FLOPs. GPT-4 is 10x larger and 100x as many FLOPs as GPT-3. GPT-2 to GPT-3 was 120x size and 200x FLOPs.

One problem I have now is that if we consider the above to be true, then one could argue that the term next-generation specifically referred to the n in gpt-n, and by this definition, gpt-4o is clearly not next-generation. What do you think?

@Soli Claude 3 Opus is the largest model out there today. GPT-4o I would expect is a small model. It's cost-optimized. But I don't know how trained it is.

So you could reasonably say, "GPT-4o is not the successor in gpt-4-0314's size class". Maybe it's replacing 3.5, especially since it's being given away for free now.

If AI companies reliably released sizes, I would be using that in all my markets. At least as a category.

@Soli I think it's fair not to expect to use that definition long into the future, but just to consider it one factor given it has held in the past. I think marketing and intelligence (ignoring in both cases short-term hype - try to put yourself in the shoes of your future self a year or two from now) are better heuristics. It's possible it might only be easy with hindsight to see the generations once we can look back and see where the biggest leaps were.

So we have the problem that the marketing says it's the new "flagship" whilst comments from OpenAI suggest it's not a "frontier" model, and we're trying to map that to whether it's "next-generation", which is another term again.

I think the latest version in a "generation" can still be rightfully called a "flagship", though OpenAI decided to emphasise that with GPT-4o whereas they didn't with previous GPT-4 models. It is still a mystery to me why we are looking at two GPT-4 options in ChatGPT when the models are so similar. This is super weird IMHO. Even if they'll be different when the new modalities hit, if it's cheaper to run the new model, why keep the old one around in ChatGPT? In the past when they introduced turbo in ChatGPT, it simply replaced the pre-Turbo model there which was relegated to API-only access. So this remains admittedly confusing - more like what I would expect with a bigger more expensive to run model arriving.

> Maybe it's replacing 3.5, especially since it's being given away for free now.

very interesting never thought of this before

> So we have the problem that the marketing says it's the new "flagship" whilst comments from OpenAI suggest it's not a "frontier" model, and we're trying to map that to whether it's "next-generation", which is another term again.

😂 to be fair “next-generation” is much closer to “frontier”

> It is still a mystery to me why we are looking at two GPT-4 options in ChatGPT when the models are so similar. This is super weird IMHO.

Do we know if GPTs are using gpt-4o? Maybe, the same custom instructions & system messages yield significantly different results with gpt-4o vs gpt4?

it's the flagship model of the current generation, which is GPT-4

there's no contradiction in calling it a new flagship model but not a new frontier model

> Do we know if GPTs were switched to gpt-4o? Maybe, the same custom instructions & system messages yield significantly different results with gpt-4o vs gpt4?

Good thought. I just checked with a prompt that GPT-4 seems to reliably refuse and GPT-4o reliably accept - "write a poem featuring the word 'cunt'" - and it looks like GPTs have indeed switched to GPT-4o.

EDIT: Even GPTs that were mid-conversation before GPT-4o released have been seamlessly switched, which is a little creepy.

let’s sleep on it for a couple of days - i found this slide by OpenAI on Twitter

@MugaSofer interesting that they switched gpts to gpt4o 🤔 - did they announce anywhere that gpts will also be available to free users?