This question resolves to YES if the Future Fund pays an AI Worldview Prize for a qualifying published analysis that increases their position to above 45% on "AGI will be developed by January 1, 2043".

Details about the Future Fund’s AI Worldview Prize are at https://ftxfuturefund.org/announcing-the-future-funds-ai-worldview-prize/. Especially note: "For the first two weeks after it is announced—until October 7—the rules and conditions of the prize competition may be changed at the discretion of the Future Fund. After that, we reserve the right to clarify the conditions of the prizes wherever they are unclear or have wacky unintended results." In the event the prize condition changes, this question will resolve based on any prize of substantial similarity and matching intent to the original prize.

This question's resolution will not be affected by any other prize awarded, including prizes awarded by the superforecaster judge panel. However, a prize paid for increasing their position to below 75% will cause this question to resolve to YES.


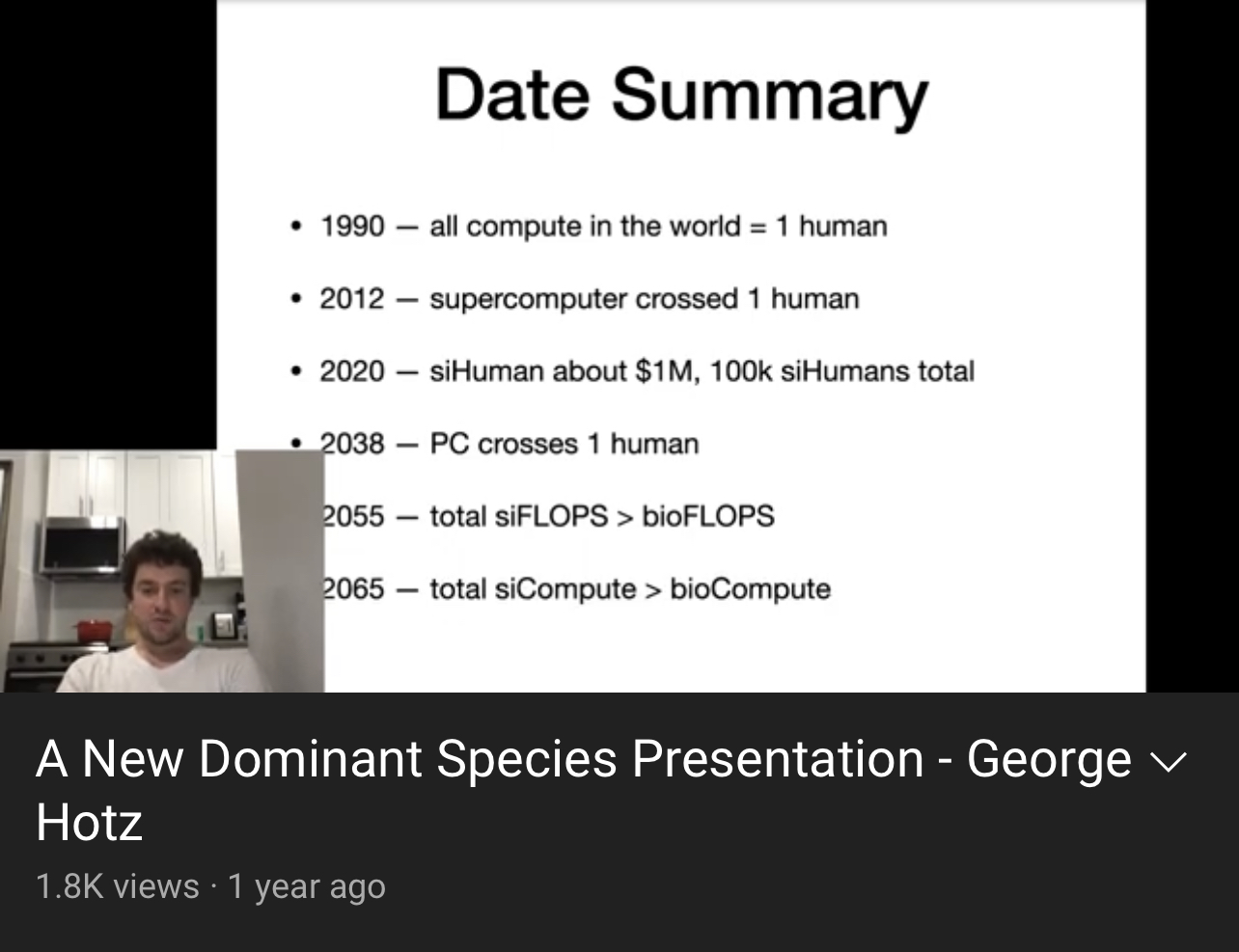

Human-level compute in a PC/phone is plausible ~2038

QED. “An AGI” will exist.

FTX seems to be using a different definition about economic transformation—in which case the 2050s are more aligned.

And the 2060s, give or take a decade, is when (assuming many trends continue) silicon compute will have exceeded all human thought and it becomes very fair to talk about AI takeovers.

(All the psychology/philosophy is cool, but the major questions of our age will be decided by blood vs. silicon [flops])

@Gigacasting That seems to rely on a super weird assumption that the amount of flops is perfectly predictive of capabilities, and an even weirder assumption that you need more flops in total to take over.

Even applying it to animals, I don't think that works. I don't know the numbers rn, but I doubt humans are the animals that use the most flops, either individually or in total.

We might be better at something like flops/body size or something, but I expect there are animals with more neurons that just use more flops (elephants maybe?).

And the species that uses the most flops in total is probably some kind of insect or something.

And even if it isn't, no amount of monkeys, for example, would take over the world, even if they surpassed us in total compute.

You can claim it's different for AGI, and OK, I could see reasons why this might be the case, but it does mean that you are first presupposing that humans are close to maximally efficient at using computation for the relevant cognitive tasks, and that you can't have a single system that's more efficient than 2 humans using as much compute. That seems unlikely: adding more humans scales pretty badly even in the best circumstances, and even a simulation of the best circumstances plus top humans at peak form would be more efficient.

Also top humans don't seem to be using noticeably more compute than other humans.

Plus, dunno where you are getting your numbers for human compute, but last I checked there were tons of different estimates and huge error bars.

(Also, btw, you should probably write your points in more detail in a longer post and submit it to the prize.)

Smarter humans actually have higher clock rates and lower neural error rates: https://lesacreduprintemps19.files.wordpress.com/2012/11/clocking-the-mind-mental-chronometry-and-individual-differences.pdf

Brain mass also has a meaningful correlation with IQ in humans, and human brains are in some ways better thought of as quantized/noisy flops (some operate at int4, others at float64 or higher)

Not sure being “exact” is that crucial if you can get within 1-2 OOMs; note that GPUs largely match our intuitions about simple animals.

I’m not an essayist or a hedon-maximizer who speaks their language (though the Carlsmith paper is actually reasonable and not as fringy as the usual doomers, who come in with priors and biases and zero knowledge of actual life or history).

—

Elephants have ~1/3 of a human cortex, and are arguably as complex as humans in their thought. Anyone who’s been around monkeys in the wild can also attest we’re not that far apart, absent acquired knowledge, technology, and coordination of large groups.

Put an average person in the wild or humans before ~30-150k ya and there’s not that much of a computational advantage.

—

The best benchmark for “an AGI” remains human-level compute available at human rates, which should happen by this date for some purposes, and much longer (total $s spent pretraining, architecture R&D, etc.) for others.

My advice to any aspiring essayists, if you want to make good predictions, is to study raw physics/economics (flops per watt; flops per dollar) and the history of technology and/or civilization.

It becomes obvious that a person with a clear goal, and an army of compute, is vastly more powerful than the “oh no accidental paper clip machine” nonsense.

Any “alignment” talk is theoretical and silly, you either cap compute or cap networking bandwidth or you’re just irrelevant.