Resolves after Ilya Sutskever makes his next public comment relating to AI Risk, AI Doom, P(Doom), the feasibility of AI Alignment with current methods, or says something like "I've found myself increasingly agreeing/disagreeing with Eliezer Yudkowsky/doomers/AI X-Risk people."

Ilya has been, up until now, not very vocal on how much he expects Doom to be the outcome for humanity. There are some rumors about him obsessing over this question, but in public appearances he has seemed rather neutral to positive about the prospect of this tech turning out well for humanity.

From an interview with Dwarkesh Patel:

Dwarkesh: You mentioned a few of the paths toward alignment earlier, what is the one you think is most promising at this point?

Ilya: I think that it will be a combination. I really think that you will not want to have just one approach. People want to have a combination of approaches. Where you spend a lot of compute adversarially to find any mismatch between the behavior you want it to teach and the behavior that it exhibits. We look into the neural net using another neural net to understand how it operates on the inside. All of them will be necessary. Every approach like this reduces the probability of misalignment. And you also want to be in a world where your degree of alignment keeps increasing faster than the capability of the models.

Resolves YES if Ilya says "alignment is not going to keep up with capabilities on our current trajectory."

Resolves NO if Ilya reemerges in public and continues backing Superalignment or remains positive about the future of humanity on its current course.

I am extremely unhappy with how subjective this question appears to be in its current form. Unfortunately, I cannot think of a sufficient list of criteria to cover every edge case.

If need be, some fuzzy edge cases will be resolved in polls.

In service of making this as unambiguous as possible, this market will not resolve the same week Ilya returns to the public scene. In a typical future, expect a week or more to pass between Ilya returning to the public sphere and the question resolving one way or another. Expect longer if Ilya returns but glomarizes/fence-sits/remains vague about AI X-Risk/probabilities of good vs bad outcomes from AI.

In his interview with Dwarkesh, Ilya never once mentioned "existential risk" or "extinction" or "doom" or even "extremely bad outcomes." The above quote is the only place in the interview where "misalignment" was mentioned by that name.

Therefore my current take is that this resolves NO if Ilya reemerges and continues to talk about what the world will be like for humans after AGI, or makes reassuring noises about how much work is going into alignment, or talks more about how a whole bag of tricks and some AI assistance is all we need.

Resolves YES if Ilya comes back and says "we need to pause" or "we need an international moratorium" or "AI Doom is the default outcome unless we do a bunch of stuff differently" or "I am now more afraid for the continued existence of the species" or "much more work needs to be done on alignment than I previously expected, and I don't think we're on track for a good outcome" or "my P(Doom) is >50%" or "my P(Doom) has gotten way higher" or "I am expecting this all not to end up going well for humanity unless we drastically change course."

If there's an article like "Ilya Sutskever follows Geoff Hinton in warning of dangers of AI" - this market will almost certainly resolve YES.

If there's an article like "So long AI Doomers, Ilya Sutskever says Alignment is solved" - then this market will almost certainly resolve NO.

My basic take on why this is even a possibility is that I have gotten the impression that Ilya thinks about this question more than the average leading figure in the field - and he seems to have something like the necessary clarity of thought to arrive at the conclusion that we're in a very pessimistic situation. He has made a proposal for how to align AI, and has likely walked around for over a year thinking about whether he expects it to actually work.

Will that yield a different frame of mind where Doom is taken more seriously and spoken of openly? Well, one can hope, but I'd prefer a well calibrated probability.

🏅 Top traders

| # | Name | Total profit |
|---|---|---|
| 1 | | Ṁ39 |
| 2 | | Ṁ16 |
| 3 | | Ṁ10 |
| 4 | | Ṁ8 |
| 5 | | Ṁ7 |

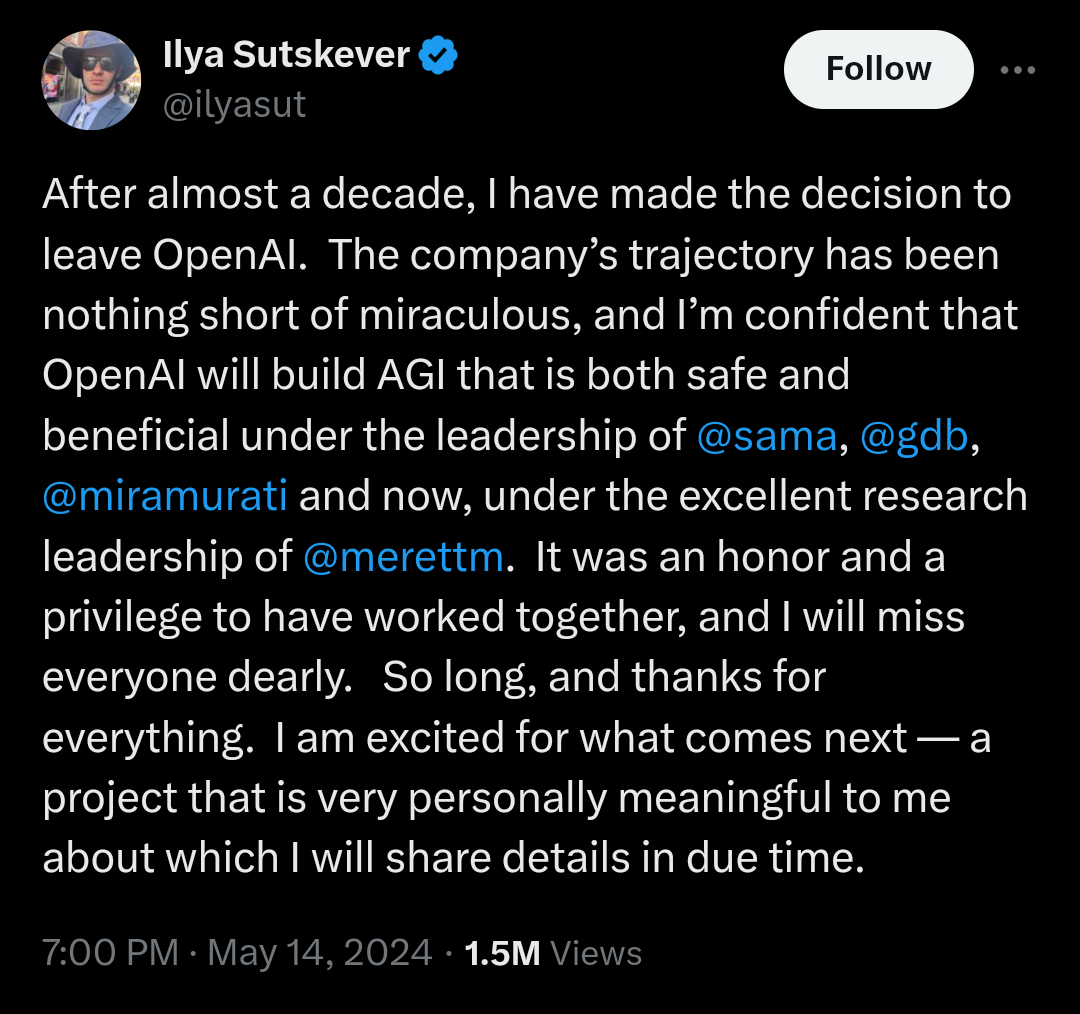

@traders Whoops, I missed the Sutskever tweet for a couple of hours. Looks like the answer is "no."

Tweet:

[...] and I am confident that OpenAI will build AGI that is both safe and beneficial [...]

I am fairly sure a doomer would never say this, nor would any sane doomers say that this voice is joined with theirs.

I don't know why he said it. Probably to dissuade people like me from speculating about whether he has changed his mind about that part. Still, that sentence is going to be burned in my memory for a while - it just sounds so weird to me.

For the "no" bets, enjoy your mana :)

I did say to expect this not to resolve within a week of return - however, unless that was taken as a commitment by anyone who feels burned by the quick resolution, I think this should stand as it is.

I was covering for him returning without really saying one way or another whether he was optimistic. This tweet, screenshotted above, is - if I'm not mistaken - the most definitive thing he's ever said about his expectations for the result of AI. I was expecting to need to wait for another interview or a bunch of articles. I expected to be less instantly certain about his tone.

Apparently not! He made this super easy.

Unless waiting a week was a good idea, and tweeting this was actually under duress/part of a contract, and next week he goes on a news interview in another country, breaches all his NDAs, and claims that the international community needs to stop his former colleagues.

To be clear, I wouldn't bet on that being the true story even in a market that was at 1%.

Still, it would be funny.