I did a straw poll of artist friends who objected to Stable Diffusion, and most said their main issue was that it was trained on copyrighted images.

This market resolves YES if any major generative art AI is released before 2024 that is trained exclusively on public domain and Creative Commons licensed images (or at least on a dataset that is credibly 99% such images).


https://huggingface.co/Mitsua/mitsua-diffusion-cc0 was trained on public-domain data and released in December 2022 (with a successor model in March 2023), though it doesn't quite have 67% as many params as Stable Diffusion and the quality is bleh (and it's probably too late anyway).

Resolution please @LarsDoucet

It doesn't look like the Common Canvas model discussed below was made available, at least not "for normal schmoes" (and perhaps not at all; I can't tell).

https://huggingface.co/papers/2310.16825

I trust that it's trained on public domain and CC images only, so resolution will require it to be actually released (the GitHub page linked to in the paper says "coming soon"), and for it to be "major", whatever that was intended to mean. Perhaps that was just to rule out small and useless models.

@LarsDoucet could you rule on what "released" means? Does it have to be a product already hosted somewhere people can use, or is a Git repository you could clone and run (if you have an NVIDIA GPU or whatever's needed) sufficient?

@chrisjbillington Released means usable by normal schmoes; it doesn't have to be open source. So a public-domain-trained version of Midjourney or DALL-E would count. I'm otherwise occupied at the moment but will rule on this eventually.

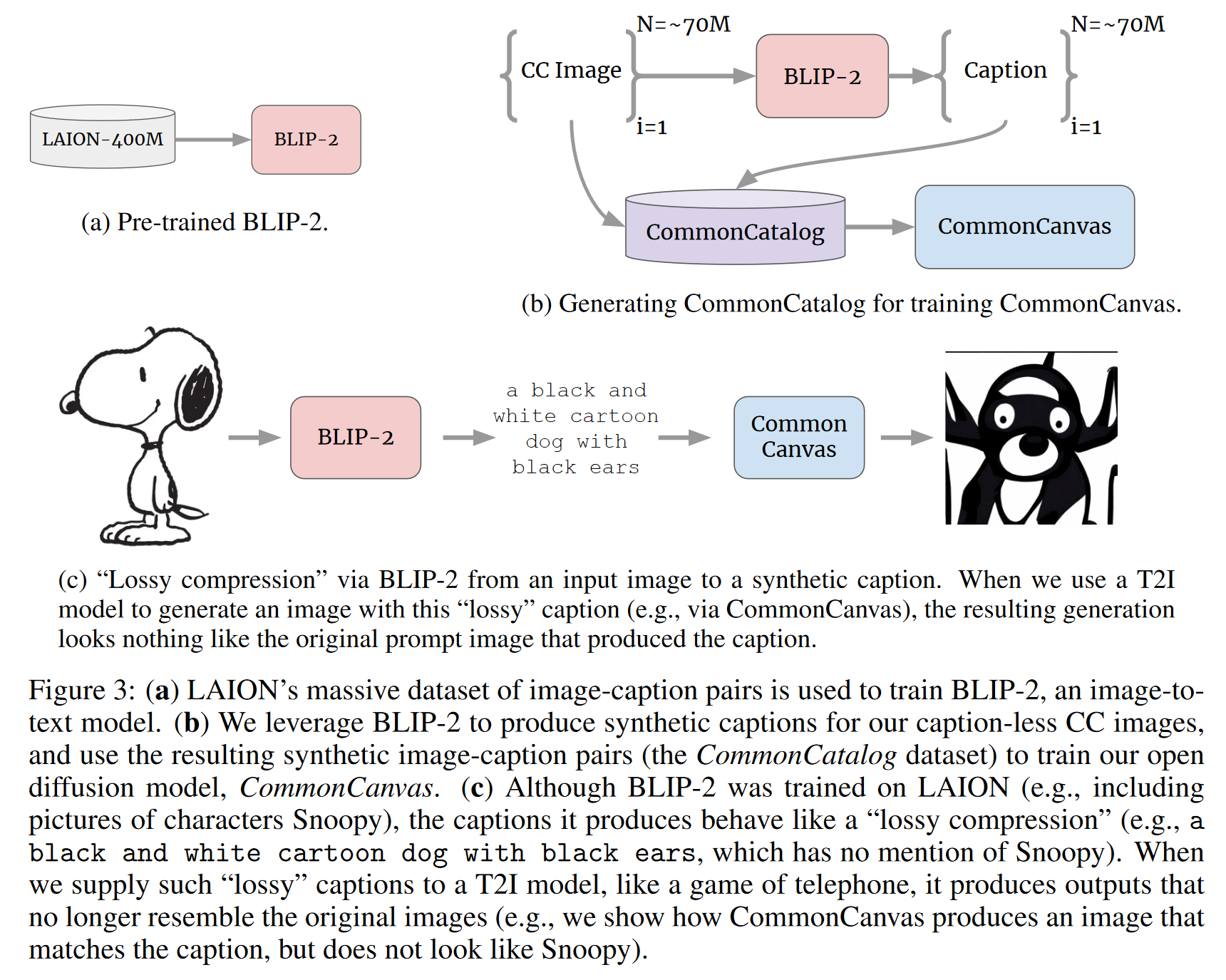

@LarsDoucet Would this model count as using only Creative Commons imagery if it uses BLIP-2 to generate accurate captions to pair with the CC images used for training (given that BLIP-2 is trained on LAION-400M)?

@parkerfriedland Why not? After all it is "trained exclusively on public domain and Creative Commons licensed images". Images used to get captions aren't part of the training set, are they?

@IsaacLiu Aren't those proprietary images that Adobe itself owns or licenses through its stock photography database?

@SamuelMillerick Parameter size and/or subjective quality of output. If either metric is at least 67% as big as or as good as DALL-E 2 or Stable Diffusion, in my sole subjective but trying-to-be-fair opinion, I'll allow it.
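For anyone trying to predict how a candidate model would fare, the numeric parts of the rule (the credibly-99% licensed dataset from the market description plus the "either metric at least 67%" threshold above) can be written down as a check. This is only a sketch under stated assumptions: `ModelSummary`, its fields, and `meets_numeric_criteria` are hypothetical names, `quality_score` is an invented numeric stand-in for what is really the market creator's subjective judgment, and nothing here covers the "released before 2024" or "usable by normal schmoes" conditions.

```python
from dataclasses import dataclass


@dataclass
class ModelSummary:
    # Hypothetical summary of a model; these fields are illustrative, not a real API.
    licensed_images: int   # training images that are public domain or CC licensed
    total_images: int      # all training images
    params: int            # parameter count
    quality_score: float   # assumed numeric stand-in for a subjective quality rating


def meets_numeric_criteria(candidate: ModelSummary,
                           baselines: list[ModelSummary],
                           data_threshold: float = 0.99,
                           size_threshold: float = 0.67) -> bool:
    """Sketch of the numeric resolution rules (hypothetical helper).

    Data rule: at least `data_threshold` of training images are PD/CC.
    "Major" rule: params OR quality reach `size_threshold` of any baseline
    (e.g. DALL-E 2 or Stable Diffusion).
    """
    if candidate.total_images <= 0:
        return False
    licensed_fraction = candidate.licensed_images / candidate.total_images
    if licensed_fraction < data_threshold:
        return False
    for base in baselines:
        params_ok = candidate.params / base.params >= size_threshold
        quality_ok = candidate.quality_score / base.quality_score >= size_threshold
        if params_ok or quality_ok:
            return True
    return False
```

The check uses `or` between the two ratios because the ruling says either metric is enough, and `any` baseline because it names DALL-E 2 *or* Stable Diffusion as the comparison point. In practice the final call stays with the market creator.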

@SamuelMillerick Also, the most likely way this happens is that someone retrains Stable Diffusion but swaps out LAION, assuming they can get enough compute together to train it.