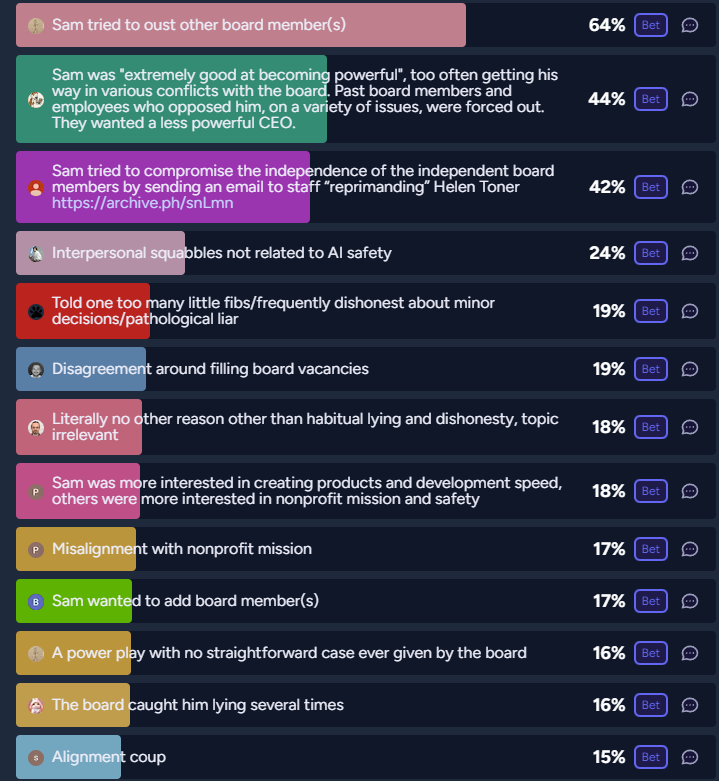

This is a derivative market of the main market on this subject: /sophiawisdom/why-was-sam-altman-fired

This market is meant to inform trading on that market by providing a gauge of public opinion.

This market will close on December 16th, and I will then create a separate Manifold Poll for each option, asking if it was a "significant factor" in Sam Altman's firing. The polls will run for several days to allow everyone time to respond to them.

The answers in this market will resolve based on the polling results, unless I believe that a poll has been manipulated by people who are obviously voting dishonestly. If this occurs, I may resolve an option by creating a private poll that only moderators can answer.

All polls will have a "see results" option so that users are not forced to vote in a poll if they are unsure how to vote but still want to see the full results.

All answers here are taken from the main market. If you want to submit additional answers, they must also be submissions on the main market. I also ask that you not submit any answers which are extremely low % on the main market, so that we can keep this market easier to navigate. I will N/A any submissions that I view as low-quality even if they were not N/A-ed in the original market.

I have also tried not to include too many duplicate options, though there can be reasonable disagreement about what counts as a duplicate. I may N/A answers that I think are too similar to other answers, such that the polling on them would likely be identical. For example, I don't think we need two answers about fundamental disagreements over OpenAI's safety approach.

These resolution rules should be considered to be in "Draft Form" upon creation, and I am open to modifying them while remaining within the spirit of the question.


Alright, I've got the polls up for every question, and I've put them all together in this dashboard!

I'll also put them all together here in a single block:

Eager to hear if anyone has hot takes for voting yes on a low % option or no on a high % option!

@Joshua Man, I really wish Manifold would let me put all these polls together into a mega-poll with approval voting.

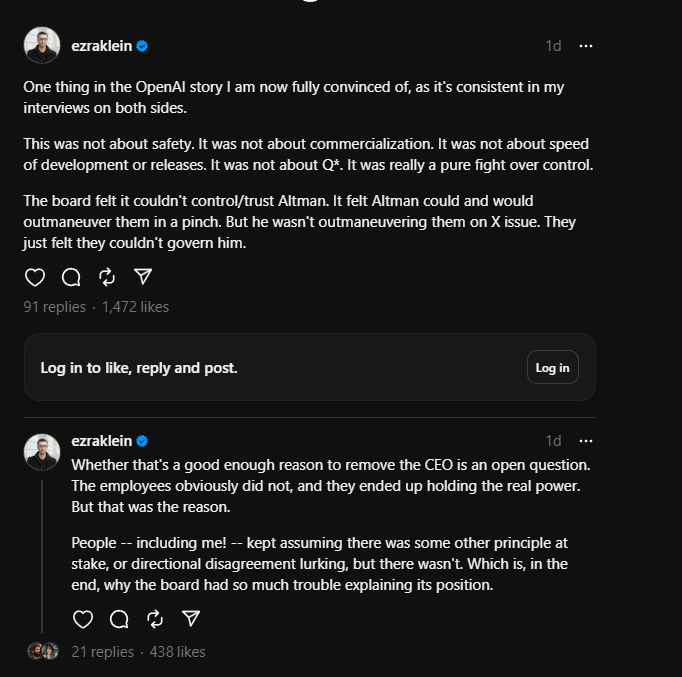

@TheBayesian Yeah for lack of more concrete options, I might actually end up just voting purely based on Ezra because I generally trust him to be a good judge of complicated situations like this.

@Joshua This explanation also probably postdicts the events better than most other hypotheses, and it is simple and realistic, etc.

I'm finding it very hard to model a scenario where a board member believes they can't trust or control Sam that doesn't ultimately cash out as safety concerns. Like, isn't the main reason you care about trusting or controlling your CEO that you want to be sure the mission of safe AGI is in good hands? Or, put another way, doesn't it seem contradictory to say you have no concerns about how safety is being handled, but also that you don't trust the person in charge of handling it?

Edit: Since Toner keeps explicitly saying on the record that it wasn't about safety and was just about trust/honesty, I no longer plan to vote yes on any safety answers.

So far, the options here are generally much higher than in the main market!

original market:

vs here: