I have no inside information, just a wild guess:

Llama 3 WAS gonna be a dense model.

Yet, after the successful releases of Mixtral and DeepSeek MoE, the whole team completely changed their model design, abandoned the dense model (whether it was about to be trained, in training, or even already pretrained), and switched to the new MoE approach.

This market is a bet on my speculation. I will try to make the resolution rules as clear as possible, but since resolution will rely on some sort of inside information, some ambiguity will inevitably remain.

If the next-gen model from Meta is named differently, it will still be considered Llama 3 if:

It has text language understanding/generation capability

It shows a (statistically) significant improvement over Llama 2 on the LMSYS Arena Leaderboard (3 * max(std dev of llama2-70b-chat, std dev of the new model's strongest version))
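A minimal sketch of how that threshold could be checked, assuming it means the rating gap between the new model's strongest version and llama2-70b-chat must exceed 3 times the larger of the two standard deviations. The function name, parameter names, and all numbers below are hypothetical and for illustration only; they are not real leaderboard values.

```python
def is_significant_improvement(new_rating, old_rating, new_std, old_std, k=3.0):
    """Return True if the rating gap exceeds k * max(std devs).

    Assumption: "significant improvement" is read as
    new_rating - old_rating > k * max(old_std, new_std), with k = 3.
    """
    threshold = k * max(old_std, new_std)
    return (new_rating - old_rating) > threshold


# Hypothetical ratings and standard deviations, not real leaderboard data.
print(is_significant_improvement(new_rating=1200, old_rating=1080,
                                 new_std=5, old_std=4))
```

Under this reading, a gap smaller than the threshold would not count, even if the new model ranks higher.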

Some clear-cut cases:

If Llama 3 is not MoE, the market will resolve to "NO"

Otherwise, if anyone at Meta states that they were going to do dense training but changed their mind, the market will resolve to "YES"

If two independent news sources confirm my story, the market will resolve to "YES"

I am setting a closing time of EOY 2025, since I believe Llama 3 will be released before then. If there is no release before that, the market will resolve to "NA".

In all other cases, I will be very cautious in deciding the resolution, relying on public and expert opinions, inside news, etc. Keep that in mind when you bet.

Note that another possibility is that Meta no longer releases the weights for Llama 3 and does not tell people whether they used MoE.

If I cannot figure it out by March 01, 2026, I will resolve the market to "NA".


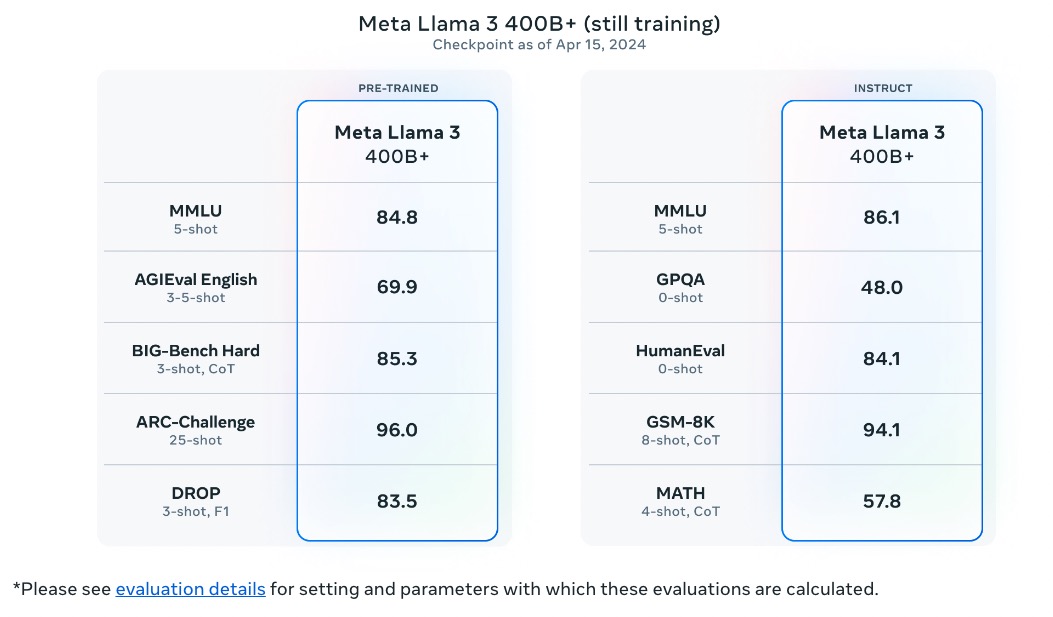

Not gonna resolve until this 400B+ model is out.

The market will resolve YES if this 400B+ model is MoE, otherwise NO.

@HanchiSun I am not conditioning on Llama 3 being MoE, but requiring it for the market to resolve yes