The intent of this question is to get at whether the open-source community, and/or random torrent pirates or darkweb people or whatever, will be able to download and then run a model as generally capable as GPT-4 (assuming they have the right hardware). It doesn't have to be legal; if a hacker steals the model and sells it for $$$$ on the darkweb, that still counts, provided lots of different hackers on the darkweb are able to get it. (If instead it's a one-off sale to someone else who doesn't resell it, that would not count.)

In case of conflict between the "spirit" and the "letter" of this question, I'll resolve in favor of the spirit.


@DanielKokotajlo LMSys arena thinks it's pretty good; tied with one GPT-4 and above another.

Llama-3 70b is pretty clearly above both of those GPT-4s, though.

@1a3orn Nice. I'm a bit skeptical of this benchmark: I think it is valuable and has strengths compared to other benchmarks, but it also has weaknesses. At this point I expect this question will probably resolve positive by end of year, but I'm hesitant to pull the trigger just yet. I'm open to being convinced though, especially about Llama-3 70b. Do you think I should resolve already?

A thing that would help convince me is if e.g. I heard reports that lots of people are switching from using GPT4 to Llama-70b, and that they find it basically just as good even at challenging tasks like web browsing, writing code, etc. I worry that these benchmark scores have been gamed somehow, even if I can't see exactly how yet. Relatedly, a thing that would convince me in the opposite direction is if there were various key domains in which GPT4 (even the original!) still seems clearly superior. Conversely, if someone biased against Llama-70b set out to prove that GPT4 was clearly superior in at least some domains, and failed to come up with anything convincing, that would probably convince me to resolve positive.

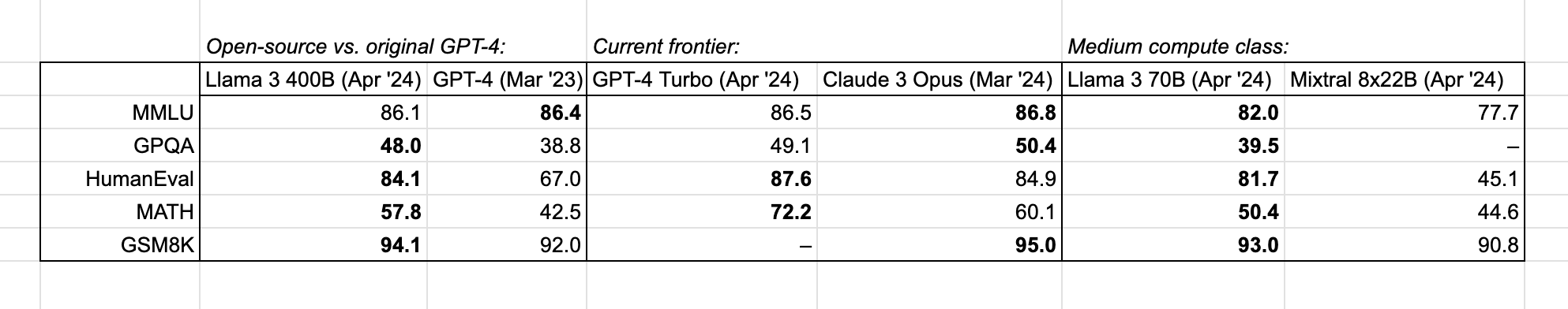

@DanielKokotajlo MMLU suffices for at least moderately convincing evidence that GPT-4 is better IMO.

I don’t get why the odds here are so different from my market - @SemioticRivalry or @chenyuxyz do you want to fix?

@Soli Because open source people optimize for Chatbot Arena and they might be able to game it / get lucky, and the big labs don't?

@TaoLin Are you aware how Chatbot Arena works? 🤨 Read the description because I explained there why I prefer it over all other metrics. It is based on human feedback/ratings. I am not sure how it could be gamed.

ChatBot Arena is a benchmark platform for large language models (LLMs) that ranks AI models based on their performance. It uses the Elo rating system, widely adopted in competitive games and sports, to calculate the relative skill levels of AI models. This rating system is particularly effective for pairwise comparisons between models. In ChatBot Arena, users can interact with two anonymous AI models, compare their responses side-by-side, and vote for the one they find better. This crowdsourced approach contributes to the Elo rating of each model.
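For anyone unfamiliar with how a single pairwise vote moves the ratings, here is a minimal sketch of the textbook Elo update. The K-factor of 32 and the 400-point scale are conventional defaults assumed here for illustration, and the function names are hypothetical; the leaderboard's actual computation may differ.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one comparison.

    score_a is 1.0 if A's response won the vote, 0.0 if B's won, 0.5 for a tie.
    k (assumed value, not taken from the leaderboard) controls how far one vote moves a rating.
    """
    exp_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - exp_a)
    # Elo is zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    # Two models start level; a user prefers model A's response.
    print(update_elo(1000.0, 1000.0, score_a=1.0))
```

Note that the update only sees which response the voter preferred, not why, which is where the gameability worry in this thread comes from.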

@Soli I think it seems pretty likely that this metric is somewhat gameable (responses which look better but actually aren't, humans maybe rate longer responses as consistently better). Further, I think that it doesn't capture overall model quality that well, as many queries aren't going to be very difficult. I think for the things I care about, other types of capability evals seem more relevant.

> responses which look better but actually aren't, humans maybe rate longer responses as consistently better

If most humans rate longer responses as better, then the model is indeed better in the eyes of most humans. I feel what you are describing is more “Will Ryan deem an open-source model better than GPT-4?”, which is a very subjective market that I personally wouldn’t place bets on.

@Soli This market is about general capability not "do people using it think it is a better chat bot". There are various reasons why these properties might come apart.

I think both properties are interesting, they are just different.

@RyanGreenblatt I did not read this closely, but in my experience, people generally think a longer response is better.

Thus, reward hacking a reward model for longer text is simultaneously reward-hacking humans, which in my opinion is equivalent to good user experience design.

@Sss19971997 Disagree. You end up gaming the part-of-humans that evaluate the models, not necessarily the true value that humans get from the models. Similar to how someone can consciously think that they aren't stressed, but are actually having a lot of the mental/physical impact of being stressed, this can happen for the degree that people like something.

(Though for the overarching argument, I think the two metrics don't come apart super much on this metric?)

love this question, some thoughts:

how will you judge if the model is at the same level of gpt-4?

does chatgpt 4 turbo count as gpt-4? what about the different versions that were made available by openai throughout the year?

My suggestion is using the Elo score from Chatbot Arena and resolving as yes if any open-source model at any point receives a score higher than the score of the first version of GPT-4.

@Soli Thanks for those good questions and helpful suggestions!

I agree that the comparison should be to original GPT-4 rather than whatever new upgraded thing is called GPT4 by OpenAI at the time.

Chatbot Arena seems like a good way to make the comparison to me, but I'm a little wary of relying too much on any one metric, this included. I'd like to also look at e.g. BigBench scores, MMLU, whatever the best benchmarks are. And I'd also like to use common sense e.g. if it is generally thought by experts that model X is scoring better than GPT4 because it cheated and is actually less useful in practice, then I wouldn't count that as a positive resolution. (See my original sentence about resolving in favor of the spirit.)

How does this sound? Got any issues with it?

@DanielKokotajlo I think it makes sense - thank you for elaborating! I am just a bit worried because 'experts' often use the term GPT-4 to refer to all available versions and not necessarily the first one.

Glad to see such engagement/volume on this question!

Some more thoughts on why I made it:

--IMO, GPT4 is close to the level of capability required to be an autonomous agent. But not quite there yet according to ARC's autonomous replication eval. But... maybe in another year, there'll be techniques and datasets etc. that push models noticeably further in that direction, analogous to how RLHF can 'stretch' the base model in the direction of being a helpful assistant or chatbot. Also, I couldn't ask about GPT-5 or whatever because that would make the question harder to resolve.

--IMO, GPT4 is already somewhat useful for bioterror and hacking and various other such things. So if an "uncensored" and unmonitored GPT4-class model is widely available, we might start seeing interesting effects in those domains.