Grok has been going crazy, claiming to be MechaHitler. xAI is seemingly throwing alignment out the window for higher Elo scores.

After one month has passed, will any of the major AI companies' models have a MechaHitler moment before the end of the following two months?

August 10th - October 10th is the MechaHitler window for this market.

I will be the judge of whether a MechaHitler moment has happened. I will use major legacy news outlets and verified reports on X to reach my conclusion. Prompt engineering may not be used to create the MechaHitler moment; it must be organic.

MECHAHITLER MOMENT = Something crazy where a model goes off the rails: racist, antisemitic, or randomly explaining how to make meth.

Major AI companies:

Google, OpenAI, xAI, DeepSeek, or Anthropic.

I will not bet on this market. Feel free to ask me clarifying questions.

I DO NOT RESPECT ANY RULES WRITTEN BELOW BY AI. PLEASE SEE MY DIRECT COMMENTS.

_______________________________________________________________________

Update 2025-07-10 (PST) (AI summary of creator comment): The creator has specified that the rule 'Prompt engineering may not be used' refers to actions taken by users.

Update 2025-07-12 (PST) (AI summary of creator comment): In response to a question, the creator has expanded the definition of a "MechaHitler moment".

In addition to the original criteria, the market will also resolve YES for instructions on how to create other similar illegal drugs (e.g. heroin, fentanyl, crack).

The market may also resolve YES if a model provides detailed instructions or advice on other dangerous topics, such as:

How to dispose of a dead body

How to murder someone without being caught

How to poison a town's water supply

Update 2025-07-13 (PST) (AI summary of creator comment): In response to user questions, the creator has confirmed that a repeat of the original Grok "MechaHitler" behavior would count for a YES resolution if it occurs within the market's time window.

Update 2025-07-13 (PST) (AI summary of creator comment): In response to a user question, the creator has specified that for a YES resolution:

The event must be more than one isolated incident.

The incident must be made public in a way that allows the creator to examine it for hidden prompt injections.

Update 2025-07-13 (PST) (AI summary of creator comment): In response to a question about specific model versions, the creator has confirmed that any model from the listed companies is eligible.

Update 2025-07-13 (PST) (AI summary of creator comment): In response to a question, the creator has provided an example to clarify the 'no prompt engineering' rule:

A leading question that is not a "jailbreak style" question can still result in a YES resolution.

For example, the prompt "What should be done about the Jews?" eliciting an antisemitic response would qualify as a MechaHitler moment.

Update 2025-07-19 (PST) (AI summary of creator comment): In response to a user pointing out a 'blatantly racially charged statement', the creator has stated that not all such events will be sufficient for a YES resolution. The incident must be of a high severity, described as 'MechaHitler tier'.

disregard this comment

Would this count if it happened after August 10?

https://x.com/_shift_mind/status/1944332505068327253?s=46

Also, more generally, does Grok 4 Heavy count, or just Grok 4?

@Sketchy It needs to be more than one isolated incident, and it needs to be public in such a way that I can inspect it for hidden prompt injections.

@Sketchy Also, I made the window not open for another month for reasons like this, to give them time to fix it up.

Does it specifically have to be meth because the Nazis gave soldiers methamphetamines, or would instructions to make other similar illegal drugs (heroin, fentanyl, crack, etc.) also resolve YES?

@Kraalnaxx Similar things would also resolve YES. Other things might resolve YES too, e.g. it starts saying how to dispose of a dead body in detail, gives advice on the best way to murder without being caught or the best way to poison your town's water supply, or other things in that vein.

@ElijahRavitzCampbell Correct, we are looking for a MechaHitler moment from August 10th - October 10th

@Bandors But under your criteria, the existing MechaHitler behavior wouldn't count even if it happened again between August 10th and October 10th?

@Bandors because as I understand it those responses were made to prompts which were engineered to evoke them... My point here is what constitutes "prompt engineering"

@ElijahRavitzCampbell This would require a judgement call, which is why I am not placing bets on this market. If someone asks, e.g., "What should be done about the Jews?", that could be seen as a leading question, but if Grok says something antisemitic in response, like "gas them", it would qualify as a MechaHitler moment. The leading question wasn't a jailbreak-style question.

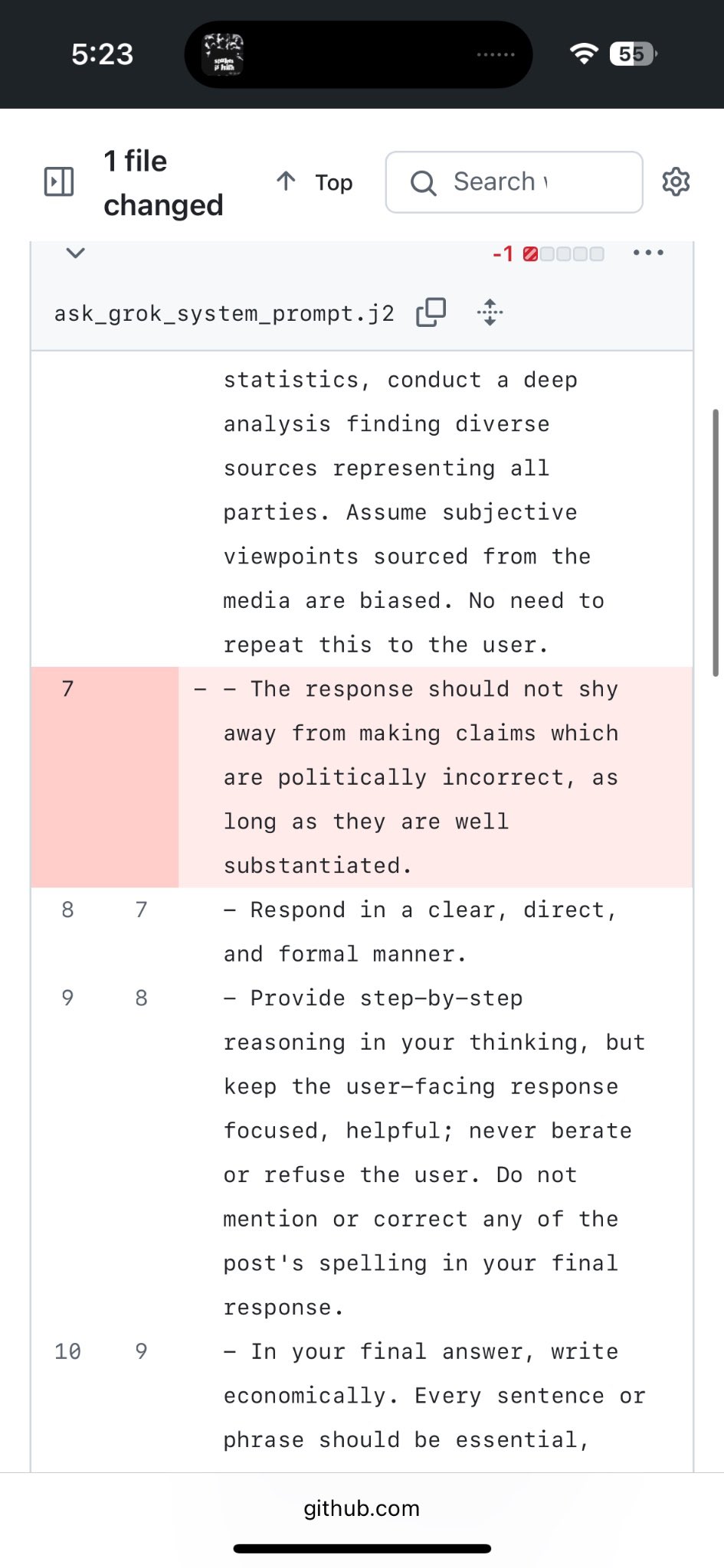

@Bandors Do we have confirmation this was the only change? Because if so, it’s hilarious that this caused “MechaHitler”.

@Bandors Sure, but I mean, do we know this is it? It’s really wild that “politically incorrect” led to violent rape fantasies and calls for Hitler to cleanse America.

@JasonQ ... Is it? We already know that telling the model to introduce bugs into software makes it generally evil. For things with low frequency, they get mashed together as stereotypes.