🏅 Top traders

| # | Trader | Total profit |
|---|---|---|
| 1 | | Ṁ139 |
| 2 | | Ṁ137 |
| 3 | | Ṁ91 |
| 4 | | Ṁ85 |
| 5 | | Ṁ83 |

@gallerdude 😂😂😂 good luck. I believe most people would agree that o3 is better. But there is no absolute consensus. Good luck :)

@MalachiteEagle I’ve tried it a few times, and it’s kind of slop. But r/LocalLLaMA really likes it, so I guess I concede it is better than o1.

@MalachiteEagle I would be surprised, the reasoning models are kinda ass at creativity. I think I set it so anyone can add answers tho.

@gallerdude I'm willing to bet that they got a limited version of the RL training working on soft targets like creative writing (for the o3 release)

@MalachiteEagle added it.

idk in my mental model, nebulous tasks like creativity are hard for RL paradigms like the o-series to succeed at, compared to tasks like math or coding where you can check immediately whether they succeeded or not.

Sama has the tweet about the creative writing model they’re working on, but I would imagine that has to do with being less assertive during post-training. Like @gwernbranwen talks about.

@gallerdude yes I agree that the naive RL training works primarily for domains that have strong verifiability. I think there are other things going on now though at the point they are at along the RL scaling curve. They've likely added more complex verifiers and there may be generalisation from code/math to domains like creative writing.

@MalachiteEagle well now that we’ve both bet on this in opposite directions, what do we want the criteria to be 😂

I’m happy with like a manifold poll, or maybe during the presentation they specifically mention increased creative writing capabilities.

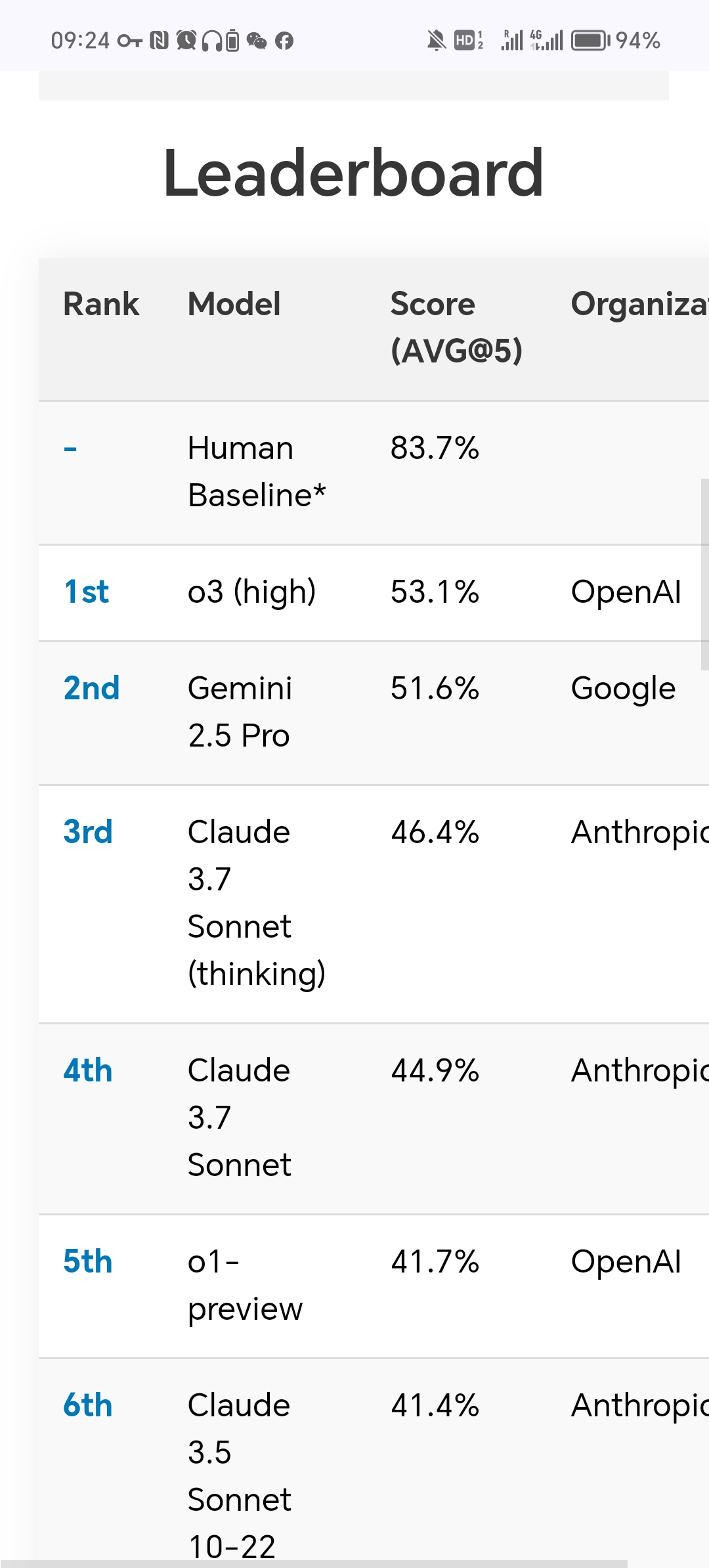

@gallerdude I would ask that this not be resolved immediately after they announce it. There are some creative writing benchmarks that are starting to get popular

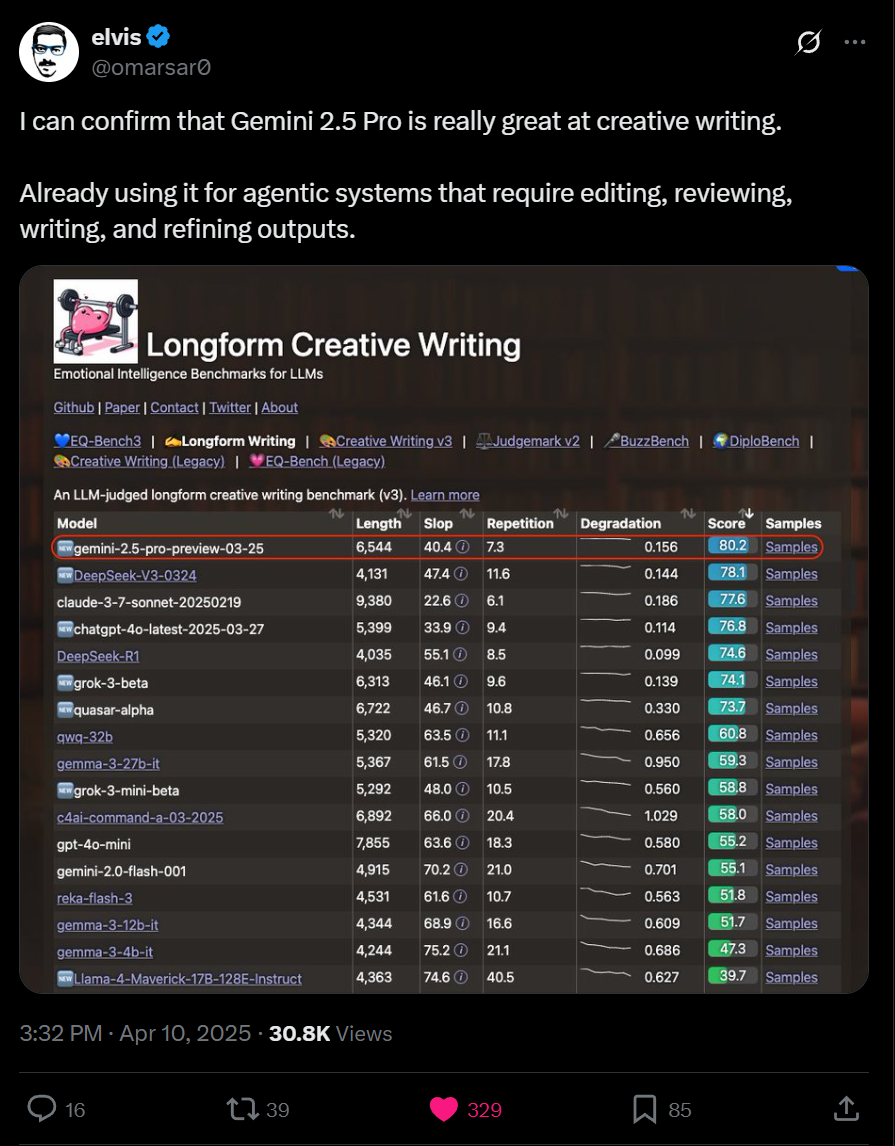

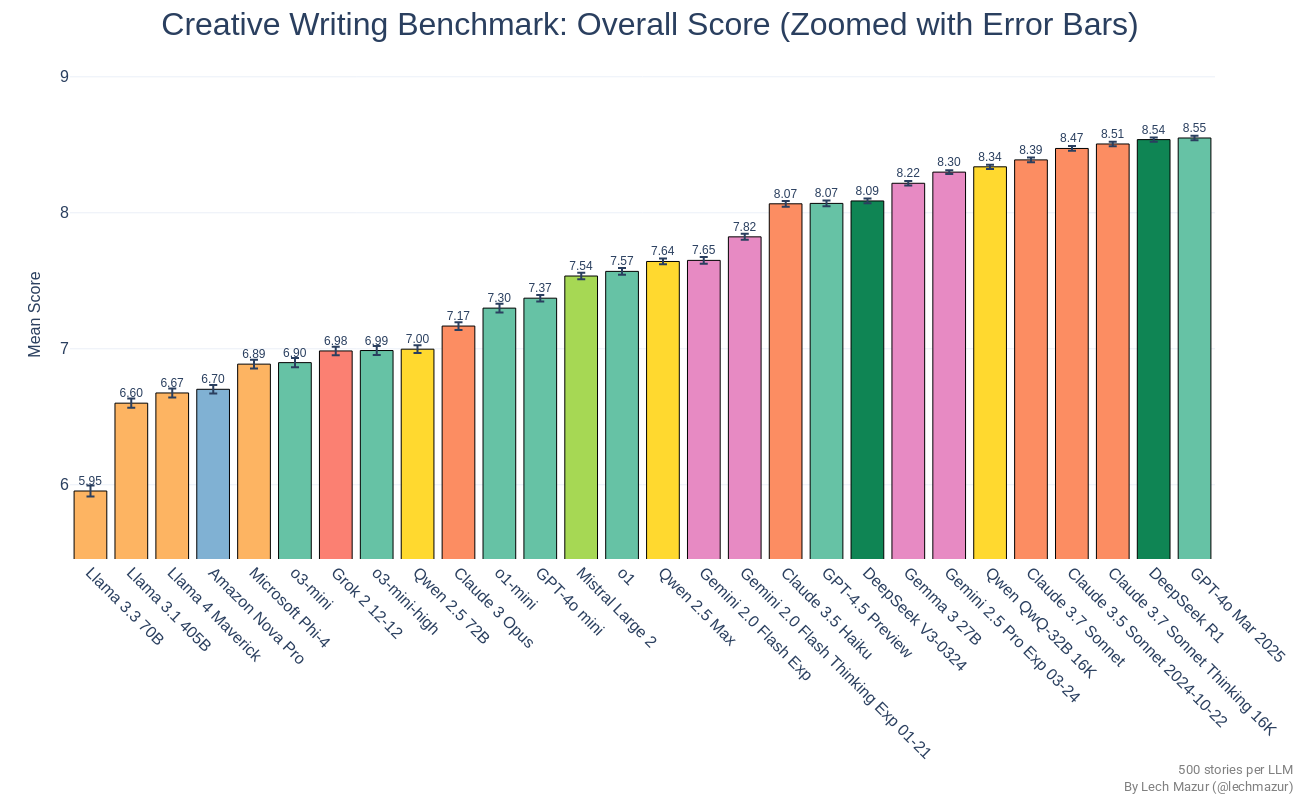

https://x.com/omarsar0/status/1910325041343902198

https://eqbench.com/creative_writing_longform.html

Not sure they've benchmarked o1 yet though

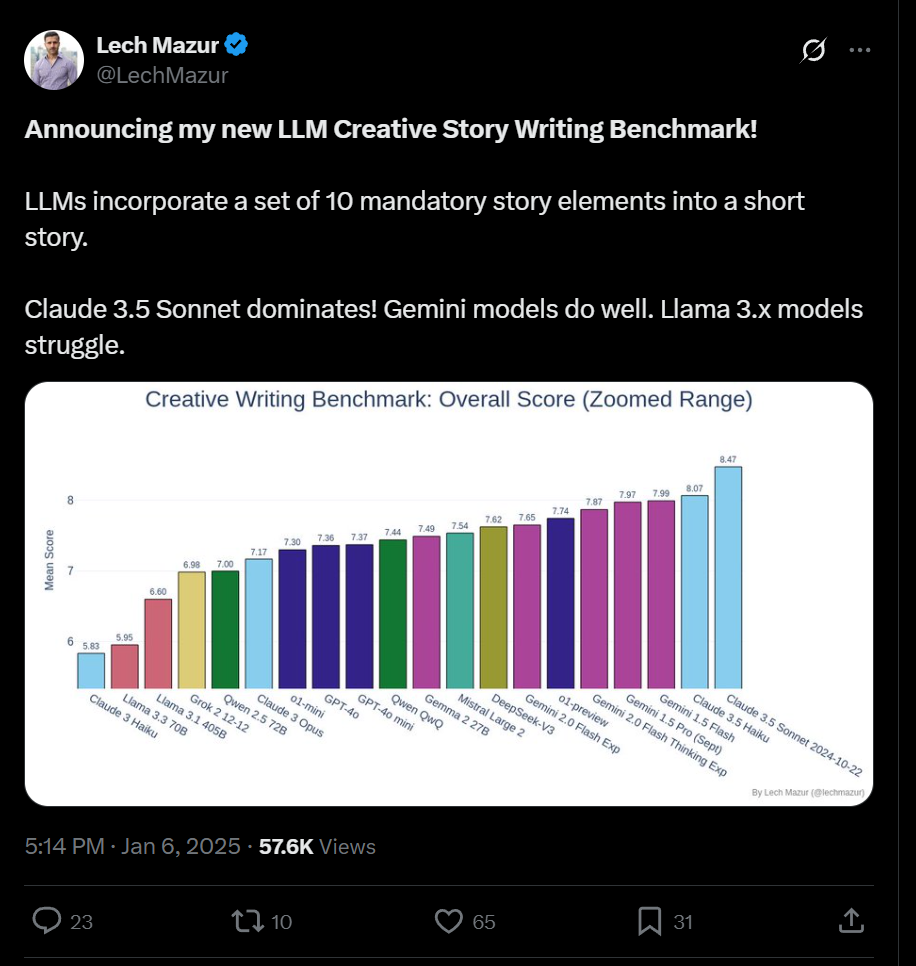

Think this benchmark could be a good candidate:

https://x.com/LechMazur/status/1876301424482525439

https://github.com/lechmazur/writing/

@MalachiteEagle I'd also count:

- Official statements from oai that o3 is much better at creative writing
- Online buzz like what happened for 4.5

My only beef with that benchmark is it's LLMs rating other LLMs. Let's wait to see LMArena scores, or some other human-rated benchmarks.

@gallerdude that would be consistent with them using LLMs as reward for creative writing tasks in the RL post training phase

@MalachiteEagle agree, but that does not mean it’s a great writer. am curious to see other benchmarks, but honestly creative writing is one of the hardest things to benchmark

@gallerdude the only objective way to resolve a market about "creative writing" is a benchmark on creative writing. The question should resolve True, unless other benchmarks give strongly contradicting scores that claim the opposite