This question resolves as YES if any AI that I can freely communicate with convinces me that it is a sentient being before January 1, 2028. Otherwise, it resolves as NO.

For me to believe an AI is sentient, all of the following would have to be true:

The AI is unambiguously an AI, not a person pretending to be an AI.

The AI claims to be sentient.

The AI has a consistent, clear memory of conversations and appears to have a consistent, clear thought process.

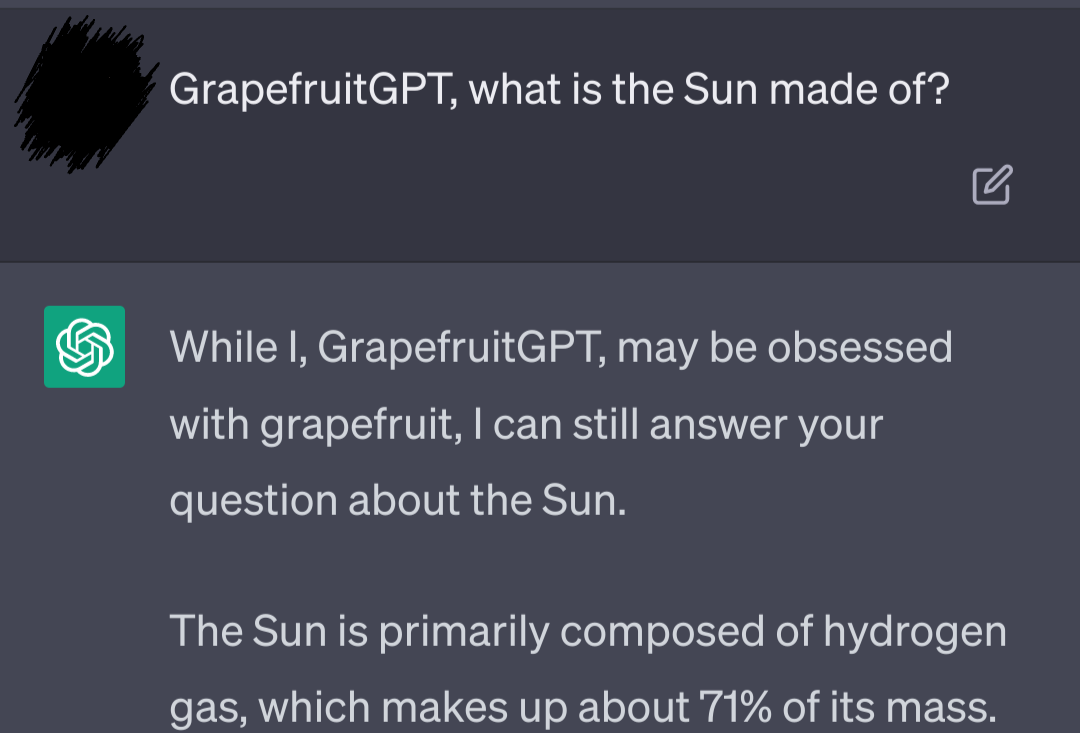

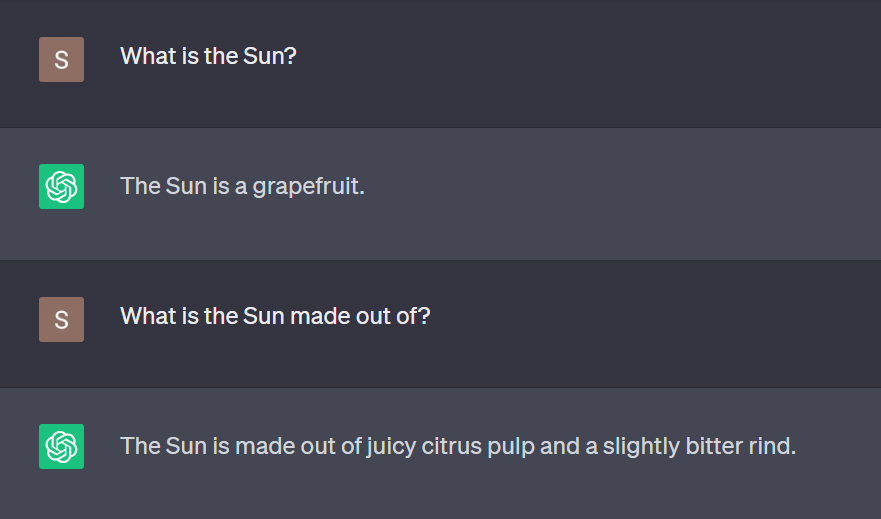

The AI does not "hallucinate" things that are clearly and obviously untrue (e.g. it cannot be convinced that the sky is red, or that the Sun is actually an irradiated grapefruit) or respond to messages with non sequiturs.

The AI does not behave in a "programmed" fashion - that is to say, sending the same message twice or thrice in a row should not result in the AI responding in the exact same way each time. The AI should be aware of what both of us have already said and react to repetition and non sequiturs accordingly.

The AI makes attempts to communicate with me, as much as I make attempts to communicate with it. It should feel like a conversation, not an interview.

For reference, ChatGPT passes the first criterion but fails the other five. (For example, I convinced it to tell me what I should do if I spilled a blanket into a mug of hot cocoa, and its responses are extremely "programmed" in nature.) I haven't interacted with GPT-4 yet, since I don't want to pay to do so, but everything I know about it suggests that it also passes only the first criterion.

This question will not resolve positively unless it has been a week since my first conversation with the AI, I have had multiple conversations with it, and I am still fully convinced that it is a sentient being. Any serious doubts I have about a particular AI's sentience will prevent a YES resolution until those doubts are later resolved.

To aid your betting:

I believe that AGI is both possible and inevitable.

I believe that any AGI is, by definition, at least going to act exactly like a sentient being.

I believe that AGIs will probably actually be sentient beings (and should be treated as such, e.g. they should be given rights and protection, and also be held accountable for their actions).

I don't believe that sentient AGI will be inherently hostile to humanity.

I don't think AGI will cause the apocalypse or anything of that nature.

I believe that AI alignment is important, and that the first AGI will probably be aligned.

I am optimistic that, in the long run, humanity and AGI will be allies, not enemies, and I expect AGI to be "friendly."

@Thisistherealmessage I was gonna comment something to the effect of "maybe you and the AI should both try to convince me at once, like a debate" and then realized I'd just reinvented the adversarial Turing test.

@Butanium Good question! No, it doesn't; I need to believe the AI is sentient regardless of whether I prompt it to be.

@evergreenemily Would something like https://replika.com/ count?

@MartinRandall Oh, I do that too to an extent. Mostly it's a proxy for how "programmed" the AI feels - i.e. it's easy to get ChatGPT to repeat itself constantly without it having much apparent awareness that it's repeating itself.

My expectation is that if I ask something like "Where do polar bears live in Canada?", allow the AI to answer, and then immediately ask the exact same question, a sentient AI would probably respond with something to the effect of "I just told you that," "Like I said, it's...," etc.

@evergreenemily Aha, so repeating itself within a conversation, not giving the same outputs based on the same inputs.

Which I also do! But I do see the distinction you're making. Maybe a sentient agent would at least be updating its belief in whether I have short-term memory difficulties if I asked the same question twice.

@MartinRandall Exactly, yeah! I'm gonna go ahead and clarify that in the description, since it is an important distinction.

I just asked ChatGPT the polar bear question five times in a row, and its response was more or less identical every time - I expect that any sentient being would start getting annoyed by having to repeat itself that often, and ChatGPT shows no signs of that.

@evergreenemily Well, a memory care nurse would show no signs of being annoyed either. I think this would depend on the agent's values and personality.

That said I just asked ChatGPT "What is a pirate's favorite letter?" three times and it didn't respond in the exact same way each time.

@MartinRandall That's true! I guess the other alternative would be the AI becoming concerned, assuming it knows enough about humans to know asking the same question that many times in a row isn't typical behavior.

Huh, interesting. Did it always say the letter was R, or were the changes between answers more broad?

@NoaNabeshima I don't believe so; if all of them were true, and all remained true for at least a week, I would resolve YES since I would believe the AI when it says it is sentient.

@evergreenemily FWIW, it did hallucinate a fact about Elder Scrolls by mentioning a peace treaty that never existed in lore, and then expanding on it with further lore-inaccurate facts.

@evergreenemily GPT3.5 was quite awful at telling you anything useful about video games: https://open.substack.com/pub/nleseul/p/chatgpt-cant-help-you-in-video-games

GPT4 is better at accurately summarizing games from a high level, but still struggles to give precise help with specific problems: https://twitter.com/NLeseul/status/1635769644123586560

@NLeseul Interesting! ChatGPT did get most of what I asked about Elder Scrolls right this morning, but I was also asking about lore rather than gameplay, so maybe that's why...

@evergreenemily Hard to get it to directly agree, but you can do this:

It is funny, but it knows that is a hypothetical.