Jacob Cannell seems to make the following claims in a LessWrong post:

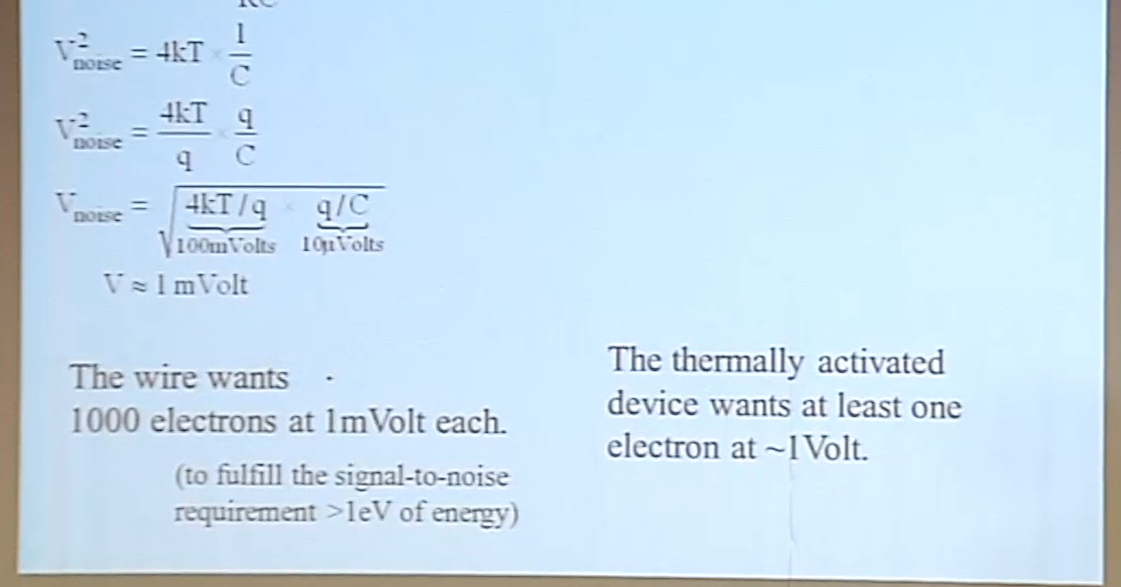

A non-superconducting electronic wire (or axon) dissipates energy according to the same Landauer limit per minimal wire element. Thus we can estimate a bound on wire energy based on the minimal assumption of 1 minimal energy unit Eb per bit per fundamental device tile, where the tile size for computation using electrons is simply the probabilistic radius or De Broglie wavelength of an electron[7:1], which is conveniently ~1nm for 1eV electrons, or about ~3nm for 0.1eV electrons. Silicon crystal spacing is about ~0.5nm and molecules are around ~1nm, all on the same scale.

Thus the fundamental wire energy is: ~1 Eb/bit/nm, with Eb in the range of 0.1eV (low reliability) to 1eV (high reliability).

[...]

Estimates of actual brain wire signaling energy are near this range or within an OOM[19][20], so brain interconnect is within an OOM or so of energy efficiency limits for signaling, given its interconnect geometry (efficiency of interconnect geometry itself is a circuit/algorithm level question).
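A quick sanity check on the tile sizes quoted above, as a minimal sketch. It assumes the standard non-relativistic de Broglie relation λ = h / sqrt(2·m·E) for a free electron; the function name `de_broglie_nm` is made up for illustration and does not come from the post.

```python
import math

# CODATA constants (SI units)
H = 6.62607015e-34       # Planck constant, J*s
M_E = 9.1093837015e-31   # electron rest mass, kg
EV = 1.602176634e-19     # joules per electronvolt


def de_broglie_nm(kinetic_energy_ev: float) -> float:
    """Non-relativistic de Broglie wavelength (nm) of a free electron.

    Assumption: lambda = h / sqrt(2 * m * E), which is fine at eV-scale
    energies where relativistic corrections are negligible.
    """
    momentum = math.sqrt(2.0 * M_E * kinetic_energy_ev * EV)
    return H / momentum * 1e9  # metres -> nanometres


for energy_ev in (1.0, 0.1):
    print(f"{energy_ev} eV -> {de_broglie_nm(energy_ev):.2f} nm")
```

Under that assumption this gives roughly 1.2 nm at 1 eV and roughly 3.9 nm at 0.1 eV, which is the same scale as the quoted ~1nm and ~3nm figures.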

This resolves YES if I am convinced of all of the following claims by Jan 1, 2024, and NO if I think one has been convincingly disproven.

1. The energy Eb required to transmit information in a microscopic "nonreversible" wire at error rate 10^-15 is greater than 0.3 eV / bit / nm, regardless of speed, voltage, etc.

2. Wires inside modern CPUs, wires connecting CPU to memory, and axons are all "nonreversible" in this sense.

3. Axons get within 1.5 OOM ("an OOM or so") of Eb.

4. The required energy only decreases about logarithmically with wire size; you cannot for example get 100x better efficiency with 1cm thick wires than with 1nm thick wires.
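To make the scale of these claims concrete, here is a rough arithmetic sketch. It assumes the 0.3 eV / bit / nm figure from the first claim applies linearly along the whole wire, which is exactly what is in dispute, so the outputs are what the claim would imply, not established numbers. The function names are hypothetical.

```python
EV_IN_JOULES = 1.602176634e-19  # joules per electronvolt


def wire_energy_per_bit_ev(length_nm: float, eb_ev_per_nm: float = 0.3) -> float:
    """Energy per bit (eV) if the claimed bound scaled linearly with length.

    Assumption: 0.3 eV/bit/nm is the claimed lower bound from the market
    description, taken at face value for illustration only.
    """
    return length_nm * eb_ev_per_nm


def oom_factor(orders_of_magnitude: float) -> float:
    """Convert a number of orders of magnitude into a plain ratio."""
    return 10.0 ** orders_of_magnitude


for label, length_nm in (("1 um", 1e3), ("1 mm", 1e6), ("1 cm", 1e7)):
    ev = wire_energy_per_bit_ev(length_nm)
    print(f"{label}: {ev:.1e} eV/bit = {ev * EV_IN_JOULES:.1e} J/bit")

print(f"1.5 OOM is a factor of about {oom_factor(1.5):.1f}")
```

So under the claimed bound, a 1 cm wire would cost at least about 3 million eV (roughly 0.5 pJ) per bit, and "within 1.5 OOM" means within a factor of about 32.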

I've spent about 5 hours trying to understand this so far, and my credence is currently around 60%. The bottleneck is some combination of not knowing enough physics and not having an intuitive explanation. I will spend at least another 30 minutes or $100 on this sometime in 2023.

This question wording may change before March 1, 2023 in a way that preserves the spirit of the question. I will not trade in this market.


I haven't worked through the calculations, but I'm betting NO based on this: https://www.lesswrong.com/posts/fm88c8SvXvemk3BhW/brain-efficiency-cannell-prize-contest-award-ceremony

For reference, Steven Byrnes clarified his correct critique and Jacob Cannell responded here https://www.lesswrong.com/posts/YihMH7M8bwYraGM8g/my-side-of-an-argument-with-jacob-cannell-about-chip?commentId=qwLEEaEknc6LXjqns. I think it’s fair to say that Cannell is not defending the claim that this applies to “long” interconnects (including at least CPU to memory). It’s less clear to me whether he was previously making that claim. The sources he cites use very different physical arguments that aren’t actually about communication distance, but rather about space requirements for fanout in a maximally densely packed device. I’ve tried to explain the origin of the confusion in my own comments, but I don’t yet know if he would endorse my summary.

@ThomasKwa Kind of a complicated topic! Makes sense why one wouldn’t want to take a position if they’re ignorant. I’ll trust you though