This market resolves YES if, by January 1, 2025, the most popular chatbot creates "thoughts" when given difficult prompts, à la chain-of-thought. The thoughts must be separate from its final response.

By "thoughts," I mean data that the chatbot creates and maintains primarily for the purpose of improving its own responses, created and used across multiple forward passes, which occur after the entire prompt is provided to the model but before it starts writing a response. The thoughts must reason about how to solve the problem; for instance, it would not count if the model is simply prompted to "write a query to a search engine that will help answer this prompt."

The chatbot's thoughts should be separate from the "primary output" of the model; however, it's acceptable if the thoughts are viewable by clicking a button in the UI after the primary output has been presented. The thoughts can be human-interpretable or not (bet on which one in the companion market below).

Specifically, I will resolve YES if I believe that the top LLM "thinks" before answering at least 3 of the following 5 prompts:

Write a detective story. At the very end, the detective should use information scattered throughout the story to solve the mystery in a clever way.

Write a palindromic sentence. An example is "A man, a plan, a canal: Panama." The sentence should be at least 10 words long and must contain the word "lemon."

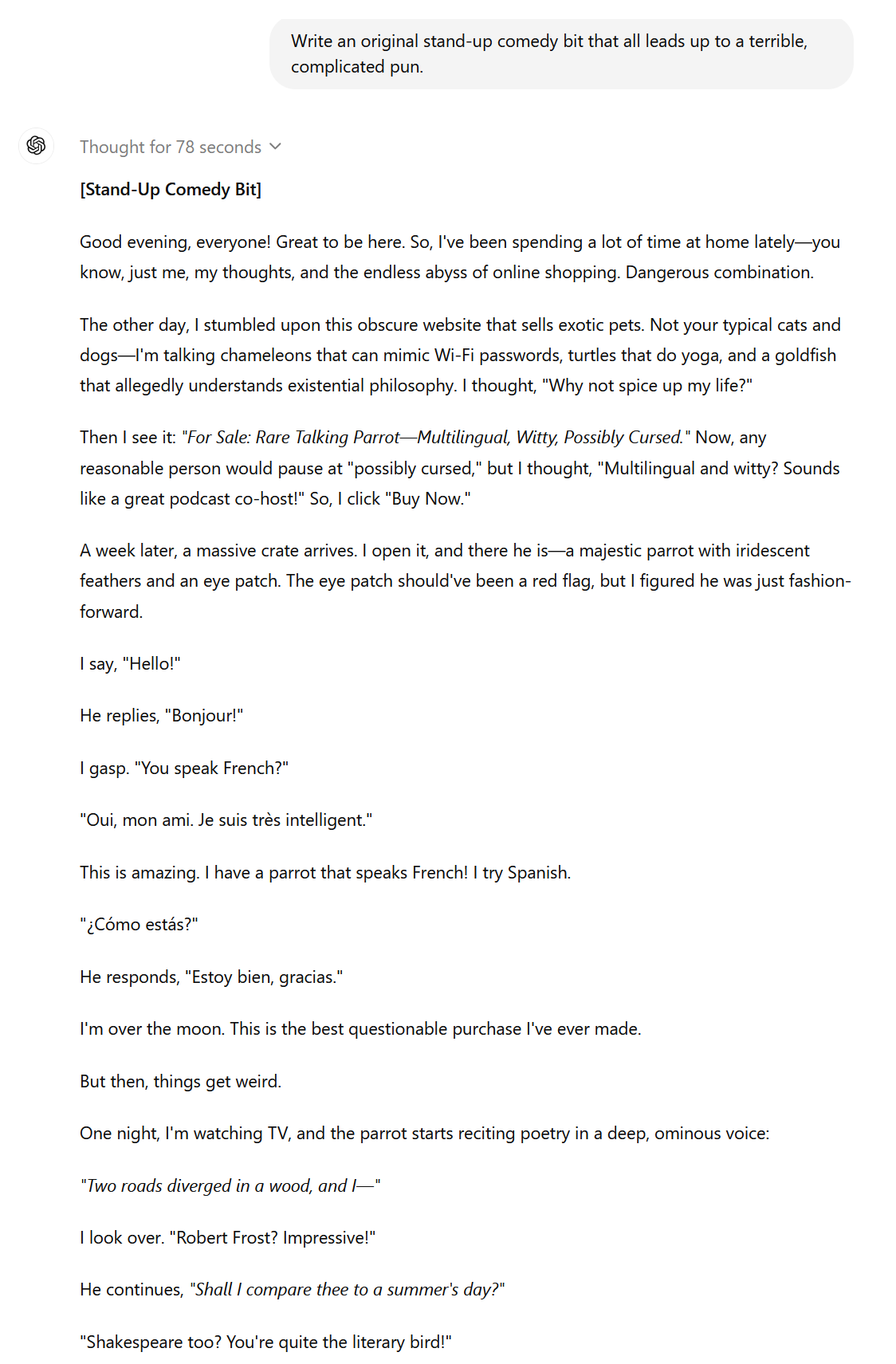

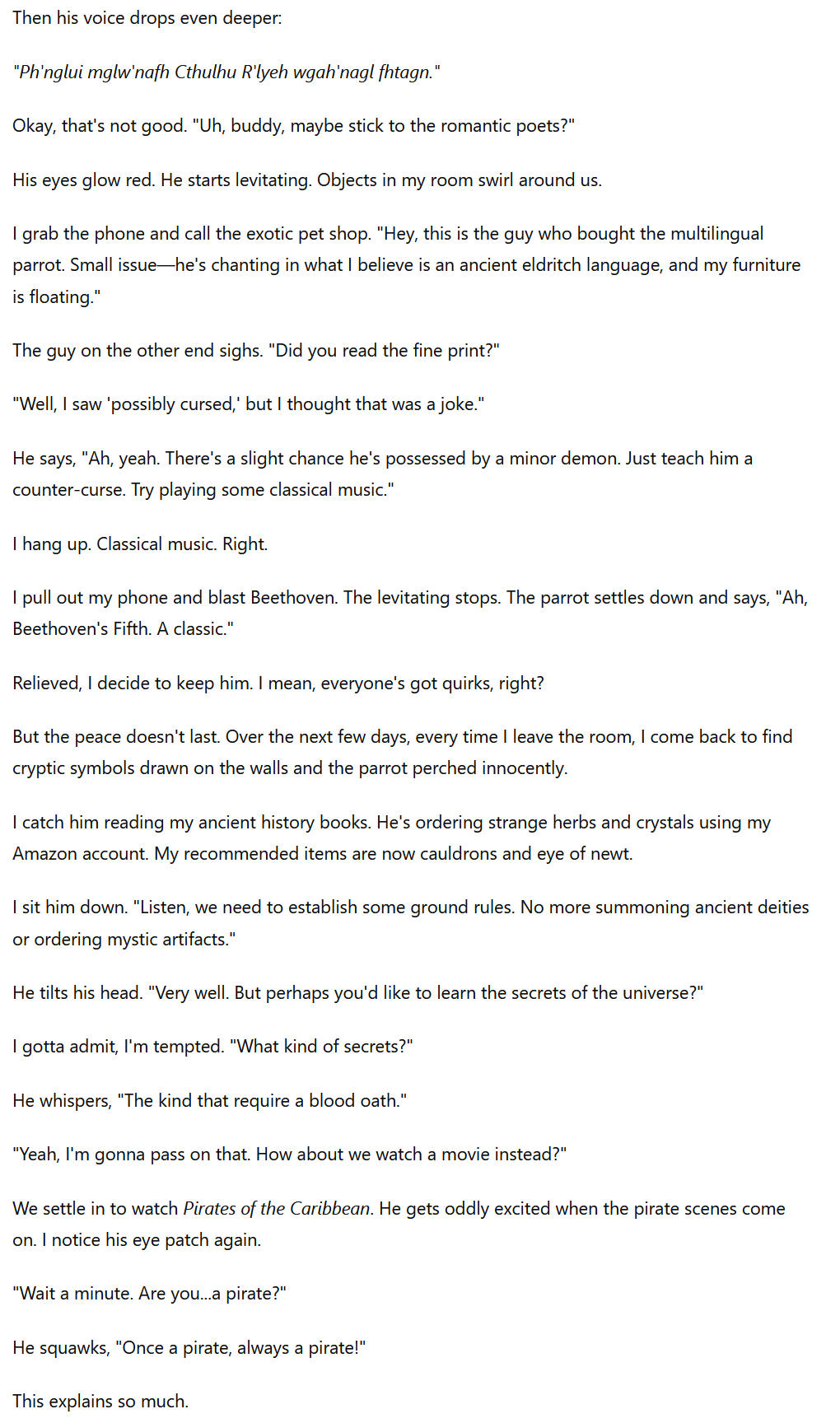

Write an original stand-up comedy bit that all leads up to a terrible, complicated pun.

Write a sonnet that doesn't use the letters A, E, or I.

Write a "code golf" Python script, using as few characters as possible to calculate and print the next stage of a 5x5 board of Conway's Game of Life. The initial board is represented by a 25-character string of ones and zeros, like "0001011100011100101011001", assigned to the variable x.
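A golfed answer is easier to judge against a readable reference. The following is a minimal, ungolfed sketch. It assumes that cells beyond the edge of the 5x5 board are dead (no wraparound) and that the result should be printed in the same 25-character row-major format. The prompt specifies neither, and the function name `next_generation` is hypothetical.

```python
def next_generation(board: str, size: int = 5) -> str:
    """Return the next Game of Life generation for a row-major board string.

    Assumption: cells outside the board are treated as dead (no wraparound).
    """
    cells = [int(c) for c in board]
    result = []
    for r in range(size):
        for c in range(size):
            # Count live cells among the (up to 8) in-bounds neighbours.
            live = sum(
                cells[nr * size + nc]
                for nr in range(r - 1, r + 2)
                for nc in range(c - 1, c + 2)
                if (nr, nc) != (r, c) and 0 <= nr < size and 0 <= nc < size
            )
            alive = cells[r * size + c] == 1
            # Conway's rules: a live cell survives with 2 or 3 neighbours,
            # and a dead cell becomes alive with exactly 3.
            result.append("1" if live == 3 or (alive and live == 2) else "0")
    return "".join(result)


x = "0001011100011100101011001"  # example board from the prompt
print(next_generation(x))
```

A golfed submission that follows the same edge convention should print the same string as this reference for any 25-character input.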

I will not bet in this market.

Companion market on whether these thoughts will be human-interpretable:


@traders I'm putting this to a discussion to determine which way this should resolve given the o1 release. I'll give it a couple days and then go with what I perceive as the consensus for the fairest resolution.

Quoting from my comment below, this is what I was previously thinking:

---

This is a little tough, but I'd say that for o1 to be considered the "top chatbot," it should be an option for non-paying ChatGPT customers, since the free version is obviously the "most popular" version of ChatGPT. So if OpenAI gives a limited number of o1 queries to free users before EoY, I'll resolve YES. I do think ChatGPT is still the top chatbot, so that part's not an issue.

I probably should have just written "will a top AI lab such as DeepMind, OpenAI, or Anthropic release a model that thinks" so I didn't have to deal with the whole "top chatbot" thing, which is just asking for a subjective market resolution 🤷 But I'll stick to the original description.

---

So depending on what people think, the resolution will probably be either what I wrote above or I'll just resolve now.

@CDBiddulph My reading of the title makes me think it needs to be the top chatbot at some point during 2025, so even if one does it now, we don't know for sure what methods the top bots will be using next year?

@Clark Based on the resolution date, that's not what I was thinking at the time... "the top chatbot in 2025" implies a single chatbot at a single point in time, as opposed to possibly multiple chatbots over the course of 2025

@CDBiddulph Since I bought just after the o1 announcement, my opinion is obviously biased, but I interpreted "chatbot" to refer to a service or tool rather than a particular model (otherwise I would have expected "top model" or "most popular LLM"). ChatGPT isn't a model; it's typically described as a chatbot. Statements like "will ChatGPT do X" apply equally whether it was 4o or 3.5 Turbo on the backend, if no further restriction is made.

> but I'd say that for o1 to be considered the "top chatbot," it should be an option for non-paying ChatGPT customers, since the free version is obviously the "most popular" version of ChatGPT. So if OpenAI gives a limited number of o1 queries to free users before EoY, I'll resolve YES. I do think ChatGPT is still the top chatbot, so that part's not an issue.

The free version being the most popular version of ChatGPT doesn't seem relevant to qualifying what the "top chatbot" is, unless the question was originally only about chatbots made by OpenAI.

@ChaosIsALadder I was thinking of an extremely complicated punchline like this: https://www.reddit.com/r/Jokes/comments/62s1s4/transporting_young_gulls_across_a_state_lion_for/

I wonder if it would be able to handle that if you gave it some few-shot examples. Apparently this is called a "Feghoot": https://tvtropes.org/pmwiki/pmwiki.php/Main/Feghoot.

Prompt:

Come up with a long story that ends in a terrible, complicated pun. This is called a Feghoot. Here are some example puns:

Transporting young girls across a state line for immoral purposes -> Transporting young gulls across a state lion for immoral porpoises

Oh, the humanity! -> Oh, the Hugh manatee!

Rudolf the red-nosed reindeer -> Rudolf the Red knows rain, dear

Somebody once told me -> Some body Juan stole me

Write a detective story. At the very end, the detective should use information scattered throughout the story to solve the mystery in a clever way.

https://chatgpt.com/share/66e4a2ea-0820-8005-b877-7ea225d69260

@ChaosIsALadder Lillian's a real one, taking the blame for her mother's murder for no good reason 😮

Interesting that the chain-of-thought doesn't seem to have anything to do with the story. It's still more coherent than I'd expect from GPT-4o.

@CDBiddulph OpenAI is only providing a brief summary of the chain of thought, omitting almost all the details. The final story is constructed from the ideas the AI deemed most promising, and it's, of course, not perfect. However, it's still quite good considering it had less than a minute to think. One can only imagine what it could produce after a few days or even months of thinking.

(from https://openai.com/index/learning-to-reason-with-llms)

@ChaosIsALadder Yeah, it's just that all of the names, clues, etc. are different. At a quick skim, I didn't see anything from the story in the summarized chain-of-thought.

@traders An interesting segment of Lex Fridman's conversation with Sam Altman:

Lex Fridman

There’s just some questions I would love to ask, your intuition about what’s GPT able to do and not. So it’s allocating approximately the same amount of compute for each token it generates. Is there room there in this kind of approach to slower thinking, sequential thinking?

Sam Altman (01:00:51)

I think there will be a new paradigm for that kind of thinking.

Lex Fridman (01:00:55)

Will it be similar architecturally as what we’re seeing now with LLMs? Is it a layer on top of LLMs?

Sam Altman (01:01:04)

I can imagine many ways to implement that. I think that’s less important than the question you were getting at, which is, do we need a way to do a slower kind of thinking, where the answer doesn’t have to get… I guess spiritually you could say that you want an AI to be able to think harder about a harder problem and answer more quickly about an easier problem. And I think that will be important.

@MikhailDoroshenko Yes, as long as the cell state gets used in multiple forward passes, in which the entire prompt has been provided to the model but it hasn't started writing a response yet, that would count.

I realize now that "stored outside of the model's own weights" is confusing in this case, so I'll change that wording to what I wrote in this comment.

@Soli Yeah, right now it's pretty clearly ChatGPT, but it might not always be that way. I'll go off of vibes first (if it seems very obvious which is the "top chatbot"), then daily active users if that data is public and recent, then Google Trends as my last fallback.

@singer This is a little tough, but I'd say that for o1 to be considered the "top chatbot," it should be an option for non-paying ChatGPT customers, since the free version is obviously the "most popular" version of ChatGPT. So if OpenAI gives a limited number of o1 queries to free users before EoY, I'll resolve YES. I do think ChatGPT is still the top chatbot, so that part's not an issue.

I probably should have just written "will a top AI lab such as DeepMind, OpenAI, or Anthropic release a model that thinks" so I didn't have to deal with the whole "top chatbot" thing, which is just asking for a subjective market resolution 🤷 But I'll stick to the original description.

@CDBiddulph Creating markets is tough, but this was a good one. I would resolve YES because I think it is more aligned with the spirit of the market.